Teachers and Examiners (CBSESkillEduction) collaborated to create the Natural Language Processing Class 10 Questions and Answers. All the important MCQs are taken from the NCERT Textbook Artificial Intelligence (417) class X.

Natural Language Processing Class 10 Questions and Answers

1. What do you mean by Natural Language Processing?

Answer – Natural language processing, or NLP, is the area of artificial intelligence dedicated to making it possible for computers to comprehend and process human languages. NLP is a subfield of linguistics, computer science, information engineering, and artificial intelligence that focuses on the interaction between computers and human (natural) languages. This includes learning how to program computers to process and analyze large amounts of natural language data.

2. What are the different applications of NLP which are used in real-life scenarios?

Answer – Some of the applications used in real-life scenarios are –

a. Automatic Summarization – Automatic summarization is useful for gathering data from social media and other online sources, as well as for summarizing the meaning of documents and other written materials. It is particularly relevant when producing a summary of a news story or blog post, because it eliminates redundancy across sources and increases the diversity of the content gathered.

b. Sentiment Analysis – The aim of sentiment analysis is to detect sentiment across several posts, or even within a single post, where emotion is not always expressed directly. Businesses employ natural language processing tools like sentiment analysis to better comprehend what internet users are saying about their goods and services.

c. Text Classification – Text classification enables you to classify a document and organize it to make it easier to find the information you need or to carry out certain tasks. Spam screening in email is one example of how text categorization is used.

d. Virtual Assistants – These days, digital assistants like Google Assistant, Cortana, Siri, and Alexa play a significant role in our lives. Not only can we communicate with them, but they can also make our lives easier. With access to our data, they can help us make notes about our tasks, place calls, send messages, and much more.

3. What is Cognitive Behavioural Therapy (CBT)?

Answer – One of the most effective ways to deal with stress is cognitive behavioural therapy (CBT), which is popular since it is simple to apply to people and produces positive outcomes. Understanding a person’s behaviour and mentality in daily life is part of this therapy. Therapists assist clients in overcoming stress and leading happy lives with the aid of CBT.

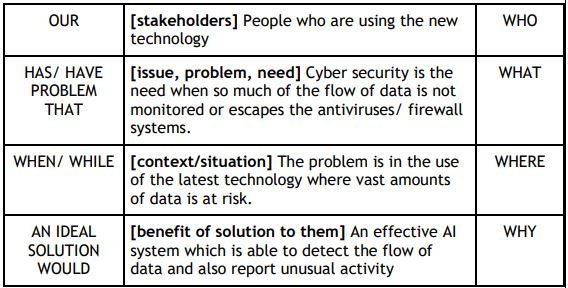

4. What is Problem Scoping?

Answer – Problem scoping means understanding a problem and identifying the various factors that affect it, which helps define the project’s purpose or objective. The 4Ws of problem scoping are Who, What, Where, and Why. These Ws make it easier and more effective to identify and understand the problem.

5. What is Data Acquisition?

Answer – To understand people’s feelings, we need to gather conversational data from them so that we can decipher their statements and comprehend their meaning. This collection of information is known as Data Acquisition. Such information can be gathered in a variety of ways –

a. Surveys

b. Observing the therapist’s sessions

c. Databases available on the internet

6. What is Data Exploration?

Answer – Once the textual information has been gathered through Data Acquisition, it must be cleaned up and processed so that it can be delivered to the machine in a simpler form. The text is therefore normalised through a number of steps and its vocabulary is reduced to a minimum, because the machine needs only the text’s main ideas rather than its grammar.

7. What is Data Modelling?

Answer – After the text has been normalised, the data is fed into an NLP-based AI model. Keep in mind that in NLP the data must be pre-processed first; only then is it supplied to the machine. Depending on the kind of chatbot we are trying to create, there are numerous AI models that can help us lay the groundwork for our project.

8. What is Data Evaluation?

Answer – Data Evaluation is the step in which the accuracy of the trained model is determined from how relevant the machine-generated answers are to the user’s input. The chatbot’s proposed answers are compared with the correct answers to determine the model’s efficacy.

9. What is Chatbot?

Answer – A chatbot is a piece of software or an agent with artificial intelligence that uses natural language processing to mimic a conversation with users or people. You can have the chat through a website, application, or messaging app. These chatbots, often known as digital assistants, can communicate with people verbally or via text.

Many organizations use AI chatbots, such as the Vainubot and HDFC Eva chatbots, to give their clients virtual customer assistance around the clock.

Some examples of chatbots –

a. Mitsuku Bot

b. CleverBot

c. Jabberwacky

d. Haptik

e. Rose

f. Ochatbot

10. Types of Chatbot?

Answer – There are two types of Chatbot –

a. Script Bot – A script bot works from a script that has been programmed into it in advance. It follows that fixed script to carry out automated exchanges, has little or no language-processing ability, and therefore offers limited functionality.

b. Smart Bot – An artificial intelligence (AI) system that can learn from its surroundings and past experiences and develop new skills based on that knowledge is referred to as a smart bot. Smart bots that are intelligent enough can operate alongside people and learn from their actions.

11. Difference between human language vs computer language?

Answer – Although human language and computer language differ significantly, one can often be translated into the other. Human languages can be used in speech, writing, and gesture, whereas machine-based languages exist only in written form. A computer’s textual language can be presented with vocal or visual cues depending on the situation, as in AI chatbots with procedural animation and speech synthesis, but in the end the language is still written. The languages also serve different purposes. Human languages are used in a wide variety of circumstances, including this blog post, whereas machine languages are used almost solely for requests, commands, and logic.

12. What do you mean by Multiple Meanings of a word in Deep Learning?

Answer – Depending on the context, the word “mouse” can refer to either a mammal or a computer device. The word is therefore described as ambiguous. The Principle of Economical Versatility of Words states that common words tend to acquire additional senses, which can create practical issues in downstream tasks. This meaning conflation also harms accurate semantic modelling: for example, words that are semantically unrelated but are each similar to a different meaning of the same word get pulled together in the semantic space.

13. What is Data Processing?

Answer – Data processing is the task of converting data from a given form into one that is much more usable and desirable, making it more meaningful and informative. The entire process can be automated using Machine Learning algorithms, mathematical modelling, and statistical knowledge.

14. What is Text Normalisation?

Answer – The process of converting a text into a canonical (standard) form is known as text normalisation. For instance, the canonical form of the word “good” can be created from the words “gooood” and “gud.” Another case is the reduction of terms that are nearly identical, such as “stopwords,” “stop-words,” and “stop words,” to just “stopwords.”

Before we start, note that in this section we will be working on a collection of written text drawn from a variety of documents. The collection of text from all the documents together is referred to as a corpus. We will go through each stage of Text Normalization and also try it out on a corpus.
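As a rough illustration of the “gooood” and “gud” example, here is a minimal Python sketch. The lookup table `CANONICAL` and the function `normalise` are hypothetical names made up for this example, and the rule that squeezes repeated letters is only a heuristic that will mis-handle many real words.

```python
import re

# Hypothetical lookup table of non-standard spellings (an assumption for this
# sketch); a real system would need a far larger list or a learned model.
CANONICAL = {
    "gud": "good",
    "stop-words": "stopwords",
}

def normalise(text):
    """Lower-case the text and map known variants to one canonical form."""
    text = text.lower()
    # Collapse the two-word variant before splitting the text into words.
    text = text.replace("stop words", "stopwords")
    words = []
    for word in text.split():
        # Squeeze runs of three or more repeated characters down to two,
        # so "gooood" becomes "good". This is a crude heuristic.
        word = re.sub(r"(.)\1{2,}", r"\1\1", word)
        words.append(CANONICAL.get(word, word))
    return " ".join(words)

print(normalise("Gooood gud stop-words and stop words"))
# prints: good good stopwords and stopwords
```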

15. What is Sentence Segmentation in AI?

Answer – Sentence segmentation is the task of breaking down a string of written language into its individual sentences. In NLP it is the method used to determine where sentences begin and end; put simply, it is how we divide a text into sentences. In Python, this part of NLP can be implemented using the spaCy library.
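To make the idea concrete without installing any library, the sketch below uses Python’s built-in `re` module instead of spaCy. The function name `split_sentences` is hypothetical, and the rule (split after `.`, `!` or `?` followed by whitespace) is a simplification that fails on abbreviations such as “Dr.”.

```python
import re

def split_sentences(text):
    """Split text after '.', '!' or '?' when whitespace follows."""
    # The lookbehind keeps the punctuation attached to its sentence.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]

print(split_sentences("Hello there. How are you? I am fine!"))
# prints: ['Hello there.', 'How are you?', 'I am fine!']
```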

16. What is Tokenisation in AI?

Answer – The challenge of breaking down a string of written language into its individual words is known as word tokenization (also known as word segmentation). Space is a good approximation of a word divider in English and many other languages that use some variation of the Latin alphabet.
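The following Python sketch shows why a space is only an approximation of a word divider. It compares plain `str.split` with a small regular expression that also separates punctuation; the function name `tokenise` is an assumption made for this example.

```python
import re

def tokenise(sentence):
    """Return words and punctuation marks as separate tokens."""
    # \w+ matches a run of letters, digits or underscores;
    # [^\w\s] matches a single punctuation character.
    return re.findall(r"\w+|[^\w\s]", sentence)

sentence = "Raj likes to play football."

print(sentence.split())
# prints: ['Raj', 'likes', 'to', 'play', 'football.']

print(tokenise(sentence))
# prints: ['Raj', 'likes', 'to', 'play', 'football', '.']
```

Splitting on spaces alone leaves the full stop glued to “football”, which is why tokenisers usually treat punctuation as its own token.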

17. What is purpose of Stopwords?

Answer – Stopwords are words that occur frequently in a corpus but add little or no meaning to it. Humans use grammar to make their sentences clear and understandable to the other person. However, grammatical words fall under the category of stopwords because they do not add significance to the information that the statement is meant to convey. Stopword examples include –

a/ an/ and/ are/ as/ for/ it/ is/ into/ in/ if/ on/ or/ such/ the/ there/ to
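Removing stopwords from a list of tokens can be sketched in a few lines of Python. The set below contains exactly the example words listed above; the function name `remove_stopwords` is hypothetical.

```python
# The example stopwords listed above, stored in a set for fast lookup.
STOPWORDS = {
    "a", "an", "and", "are", "as", "for", "it", "is", "into",
    "in", "if", "on", "or", "such", "the", "there", "to",
}

def remove_stopwords(tokens):
    """Keep only the tokens that are not stopwords (case-insensitive)."""
    return [token for token in tokens if token.lower() not in STOPWORDS]

print(remove_stopwords(["The", "bus", "is", "late", "to", "school"]))
# prints: ['bus', 'late', 'school']
```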

18. What is Stemming in AI?

Answer – Stemming is the act of stripping words of their affixes to reduce them to a base form. Stemming can be carried out manually or by an algorithm that an AI system may use. A variety of stemming techniques can reduce any inflected form that is encountered to its root. A simple stemming algorithm is easy to create.

19. What is Lemmatization?

Answer – Stemming and lemmatization are alternatives to one another because both work by removing affixes. However, lemmatization differs from stemming in that the word that results from removing the affix (known as the lemma) is a meaningful word.

Lemmatization takes more time to complete than stemming because it ensures that the lemma is a word with meaning.

20. What is bag of Words?

Answer – Bag of Words is a natural language processing model that helps extract features from text so that they can be used by machine learning techniques. From the bag of words we obtain the number of occurrences of each word and build the vocabulary of the corpus.

An approach to extracting features from text for use in modelling, such as with machine learning techniques, is known as a bag-of-words model, or BoW for short. The method is really straightforward and adaptable, and it may be applied in a variety of ways to extract features from documents.

21. What is TFIDF?

Answer – TF-IDF, which stands for term frequency-inverse document frequency, is a metric that is employed in the fields of information retrieval (IR) and machine learning to quantify the significance or relevance of string representations (words, phrases, lemmas, etc.) in a document among a group of documents (also known as a corpus).
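A small Python sketch can show how such a score behaves. It assumes one common textbook form of the formula, TF-IDF = TF × log10(N / DF), where TF is the number of times a word occurs in a document, N is the number of documents, and DF is the number of documents containing the word. Other variants of the formula exist, and the function name `tf_idf` and the sample documents are made up for this example.

```python
import math

def tf_idf(documents):
    """Return one {word: score} dictionary per tokenised document."""
    n_docs = len(documents)

    # Document frequency: in how many documents does each word appear?
    doc_freq = {}
    for doc in documents:
        for word in set(doc):
            doc_freq[word] = doc_freq.get(word, 0) + 1

    scores = []
    for doc in documents:
        doc_scores = {}
        for word in set(doc):
            term_freq = doc.count(word)                  # TF
            inverse = math.log10(n_docs / doc_freq[word])  # log10(N / DF)
            doc_scores[word] = term_freq * inverse
        scores.append(doc_scores)
    return scores

docs = [
    ["the", "bus", "is", "late"],
    ["the", "bus", "is", "full"],
    ["the", "road", "is", "jammed"],
]
scores = tf_idf(docs)
print(scores[0]["the"])   # prints: 0.0 (appears in every document)
print(scores[0]["late"] > scores[0]["bus"])  # prints: True
```

A word that appears in every document, such as “the”, scores zero, while a word found in only one document scores highest.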

22. What are the different applications of TFIDF?

Answer – TFIDF is commonly used in the Natural Language Processing domain. Some of its applications are:

a. Document classification – Helps in classifying the type and genre of a document.

b. Topic Modelling – It helps in predicting the topic for a corpus.

c. Information Retrieval System – To extract the important information out of a corpus.

d. Stop word filtering – Helps in removing the unnecessary words out of a text body.

23. Write any two applications of TFIDF.

Answer – 1. Document Classification – Helps in classifying the type and genre of a document.

2. Topic Modelling – It helps in predicting the topic for a corpus.

3. Information Retrieval System – To extract the important information out of a corpus.

4. Stop word filtering – Helps in removing the unnecessary words out of a text body.

24. Write the steps necessary to implement the bag of words algorithm.

Answer – The steps to implement bag of words algorithm are as follows:

1. Text Normalisation: Collect data and pre-process it

2. Create Dictionary: Make a list of all the unique words occurring in the corpus.

3. Create document vectors: For each document in the corpus, find out how many times each word from the unique list of words has occurred.

4. Create document vectors for all the documents.
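The four steps above can be sketched in Python as follows. Step 1 is reduced here to lower-casing and splitting on spaces, which is a simplifying assumption; the function name `bag_of_words` and the two sample sentences are chosen only for illustration.

```python
def bag_of_words(documents):
    """Build the dictionary and one count vector per tokenised document."""
    # Step 2: list every unique word in order of first appearance.
    vocabulary = []
    for doc in documents:
        for word in doc:
            if word not in vocabulary:
                vocabulary.append(word)

    # Steps 3 and 4: count each dictionary word in every document.
    vectors = [[doc.count(word) for word in vocabulary] for doc in documents]
    return vocabulary, vectors

# Step 1 (simplified): lower-case each sentence and split it on spaces.
sentences = ["Aman and Anil are stressed", "Aman went to a therapist"]
documents = [sentence.lower().split() for sentence in sentences]

vocabulary, vectors = bag_of_words(documents)
print(vocabulary)
# prints: ['aman', 'and', 'anil', 'are', 'stressed', 'went', 'to', 'a', 'therapist']
print(vectors[0])  # prints: [1, 1, 1, 1, 1, 0, 0, 0, 0]
print(vectors[1])  # prints: [1, 0, 0, 0, 0, 1, 1, 1, 1]
```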

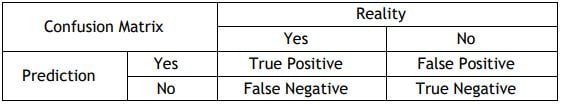

25. What is the purpose of confusion matrix? What does it serve?

Answer – The confusion matrix stores the results of comparing the predictions with reality. From the confusion matrix we can calculate metrics such as precision, recall, and F1 score, which are used to assess an AI model’s performance.

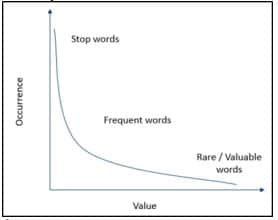

26. What does the relationship between a word’s value and its frequency in a corpus look like in the given graph?

Answer – The graph demonstrates the inverse relationship between word frequency and word value. The most frequent terms, such as stop words, are of little significance. The value of words increases as their frequency decreases. The terms that occur least often in the corpus are the most valuable, and they are referred to as rare or valuable words.

27. In data processing, define the term “Text Normalization.”

Answer – Text normalisation is the first step in data processing. It cleans up the textual data so that its complexity becomes lower than that of the original data. To achieve this, the text goes through several steps. We work with text from several sources, and the collective textual data from all the documents is referred to as a corpus.

28. Explain the differences between lemmatization and stemming. Give an example to support your explanation.

Answer – Stemming is the process of stripping words of their affixes to reduce them to a base form, which may not be a meaningful word.

After the affix is removed during lemmatization, we are left with a meaningful word known as a lemma. Lemmatization takes more time to complete than stemming because it ensures that the lemma is a word with meaning.

The following example illustrates the distinction between stemming and lemmatization:

Caring >> Lemmatization >> Care

Caring >> Stemming >> Car
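The contrast can be reproduced with a toy Python sketch. The suffix list, the tiny lemma dictionary `LEMMAS`, and both function names are assumptions made for this example; real stemmers and lemmatizers use much richer rules and vocabularies.

```python
# Suffixes the toy stemmer strips, checked in this order.
SUFFIXES = ("ing", "ed", "es", "s")

# Hypothetical mini-dictionary mapping word forms to their lemmas.
LEMMAS = {"caring": "care", "studies": "study", "went": "go"}

def stem(word):
    """Chop off the first matching suffix; the result may not be a word."""
    word = word.lower()
    for suffix in SUFFIXES:
        # Require a few letters to remain so very short words are left alone.
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

def lemmatize(word):
    """Look the word up in the dictionary; fall back to the word itself."""
    word = word.lower()
    return LEMMAS.get(word, word)

print(stem("Caring"))        # prints: car
print(lemmatize("Caring"))   # prints: care
print(stem("studies"))       # prints: studi
```

The stemmer is fast because it only trims characters, while the lemmatizer needs a dictionary lookup to guarantee a meaningful word.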

29. Imagine developing a prediction model based on AI and deploying it to monitor traffic congestion on the roadways. Now, the model’s goal is to foretell whether or not there will be a traffic jam. We must now determine whether or not the predictions this model generates are accurate in order to gauge its efficacy. Prediction and Reality are the two conditions that we need to consider.

Today, traffic jams are a regular occurrence in our life. Every time you get on the road when you live in an urban location, you have to deal with traffic. Most pupils choose to take buses to school. Due to these traffic bottlenecks, the bus frequently runs late, making it impossible for the pupils to get to school on time.

Create a Confusion Matrix for the aforementioned scenario while taking into account all potential outcomes.

Answer –

Case 1: Is there a traffic Jam?

Prediction: Yes Reality: Yes

True Positive

Case 2: Is there a traffic Jam?

Prediction: No Reality: No

True Negative

Case 3: Is there a traffic Jam?

Prediction: Yes Reality: No

False Positive

Case 4: Is there a traffic Jam?

Prediction: No Reality: Yes

False Negative
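The four cases above can be counted in Python and turned into the usual metrics. The sample predictions are invented for illustration, `True` stands for “traffic jam”, and the function names `confusion_counts` and `metrics` are hypothetical.

```python
def confusion_counts(predictions, reality):
    """Count the four confusion-matrix cases for yes/no predictions."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for predicted, actual in zip(predictions, reality):
        if predicted and actual:
            counts["TP"] += 1      # Prediction: Yes, Reality: Yes
        elif not predicted and not actual:
            counts["TN"] += 1      # Prediction: No, Reality: No
        elif predicted and not actual:
            counts["FP"] += 1      # Prediction: Yes, Reality: No
        else:
            counts["FN"] += 1      # Prediction: No, Reality: Yes
    return counts

def metrics(counts):
    """Return precision, recall and F1 score, guarding against division by zero."""
    tp, fp, fn = counts["TP"], counts["FP"], counts["FN"]
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

# Invented sample data: True means "there is a traffic jam".
predictions = [True, False, True, False, True]
reality = [True, False, False, True, True]

counts = confusion_counts(predictions, reality)
print(counts)  # prints: {'TP': 2, 'TN': 1, 'FP': 1, 'FN': 1}
precision, recall, f1 = metrics(counts)
```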

30. Make a 4W Project Canvas.

Risks will become more concentrated in a single network as more and more innovative technologies are used. In such cases, cybersecurity becomes incredibly complex and is no longer under the authority of firewalls. It won’t be able to recognise odd behaviour patterns, including data migration.

Consider how AI systems can sift through voluminous data to find user behaviour that is vulnerable. To explicitly define the scope, the method of data collection, the model, and the evaluation criteria, use an AI project cycle.

Answer –
