Data Literacy Class 11 AI Notes – The CBSE has updated the syllabus for Class XI (Code 843). These notes are based on the updated syllabus and on the updated CBSE textbook. All the important information is taken from the Artificial Intelligence Class XI textbook, which follows the CBSE board pattern.

Data Literacy Class 11 AI Notes

What is data literacy?

Data is a representation of facts or instructions about an entity that can be processed or conveyed by a human or a machine, such as numbers, text, pictures, audio clips, videos, and so on. Data literacy means being able to find and use data effectively. This includes skills like collecting data, organizing it, checking its quality, analysing it, understanding the results and using it ethically.

Data collection

Data collection is essential for machine learning models because the collected data is what allows a model to identify patterns and make correct predictions. Data can be collected from multiple sources, both online and offline, using methods such as scraping, capturing, and loading.

The amount of data required depends on the complexity of the machine learning model: simple models require less data, while complex models require more data of good quality. Before collecting the data, data scientists identify the sources from which it will be collected, based on the model's requirements. Data can be collected from two kinds of sources:

- Primary Data Source: A primary data source is a firsthand source where the data can be collected from surveys, experiments, or direct observations.

- Secondary Data Source: A secondary data source relies on existing sources such as databases, research papers, or reports.

Primary sources are sources created specifically to collect data for analysis. Some examples are given below.

| Method | Description | Example |

|---|---|---|

| Survey | Gathering data from a population through interviews, questionnaires, or online forms. Useful for measuring opinions, behaviors, and demographics. | A researcher uses a questionnaire to understand consumer preferences for a new product. |

| Interview | Direct communication with individuals or groups to gather information. It can be structured, semi-structured, or unstructured. | An organization conducts an online survey to collect employee feedback about job satisfaction. |

| Observation | Watching and recording behaviors or events as they occur. Often used in ethnographic research or when direct interaction is not possible. | Observing children’s play patterns in a schoolyard to understand social dynamics. |

| Experiment | Manipulating variables to observe their effects on outcomes. Used to establish cause-and-effect relationships. | Testing the effectiveness of different advertising campaigns on a group of people. |

| Marketing Campaign (using data) | Utilizing customer data to predict behavior and optimize campaign performance. | A company personalizes email marketing campaigns based on past customer purchases. |

| Questionnaire | A specific tool used within surveys – a list of questions designed to gather data from respondents. You can collect quantitative (numerical) or qualitative (descriptive) information. | A questionnaire might ask respondents to rate their satisfaction on a scale of 1 to 5 and also provide open-ended feedback. |

Secondary data sources are those where the data is already stored and ready for use. Data available in books, journals, newspapers, websites, internal transactional databases, etc. can be reused for data analysis. Some methods of collecting secondary data are:

| Method | Description | Example |

|---|---|---|

| Social Media Data Tracking | Collecting data from social media platforms like user posts, comments, and interactions. | Analyzing social media sentiment to understand audience reception towards a new product launch. |

| Web Scraping | Using automated tools to extract specific content and data from websites. | Scraping product information and prices from e-commerce websites for price comparison. |

| Satellite Data Tracking | Gathering information about the Earth’s surface and atmosphere using satellites. | Monitoring weather patterns and environmental changes using satellite imagery. |

| Online Data Platforms | Websites offering pre-compiled datasets for various purposes. | Kaggle, GitHub etc. |

Exploring data

Data exploration is an initial step in data analysis that helps to analyze and visualize data using statistical methods. Exploring data is about “getting to know” the data and its values.

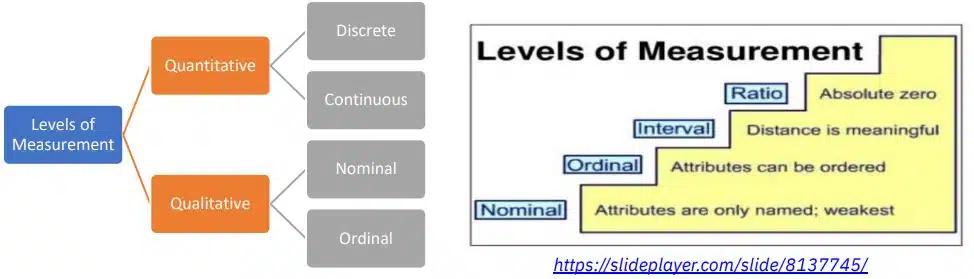

Levels of measurement

The way a set of data is measured is called the level of measurement. Not all data can be treated equally. It makes sense to classify datasets based on different criteria. Some are quantitative, and some are qualitative. Some datasets are continuous, and some are discrete. Qualitative data can be nominal or ordinal. And quantitative data can be split into two groups: interval and ratio.

Types of Data

Data can be classified according to level of measurement. The level of measurement dictates the calculation that can be done to summarize and present the data. It also determines the statistical test that should be performed.

- Nominal

- Ordinal

- Interval

- Ratio

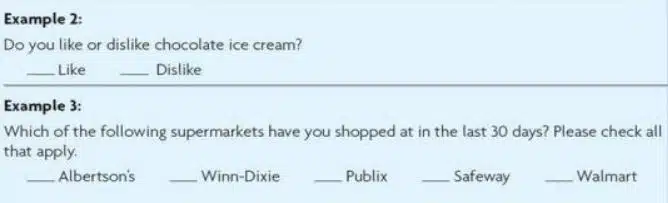

1. Nominal Level

Nominal data is qualitative data that groups variables into categories. This type of data cannot be used in calculations, and the categories have no natural order or rank. Examples include colors of eyes, yes or no responses to a survey, gender (male and female), and smartphone companies. The nominal level of measurement is the simplest or lowest of the four ways to characterize data. Nominal means “in name only.”

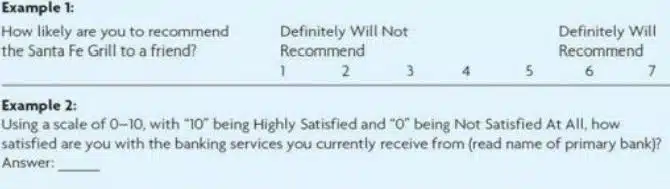

2. Ordinal Level

The ordinal level is a type of measurement scale where data categories are ranked in a specific order, but the differences between ranks cannot be measured, so ordinal data cannot be used in calculations. Ordinal data is made up of groups and categories that follow a strict order. Examples include rating a meal at a restaurant on a scale of 1 to 10, or giving a movie a rating.

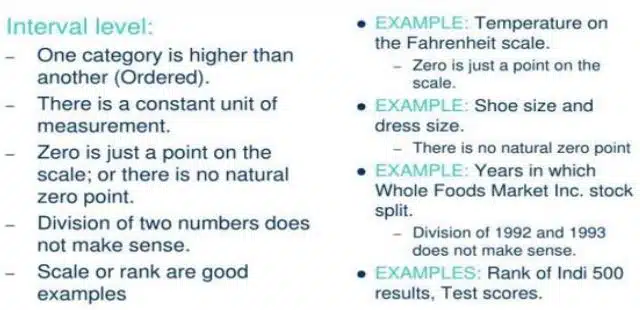

3. Interval Level

An interval scale is one where there is an order and the difference between two values has a meaning. These distances between two values are known as intervals. An interval scale has no true zero point. Data measured on the interval scale is similar to ordinal level data because it has a definite ordering, but on the interval level the differences between values can also be measured. For example, temperature scales like Celsius and Fahrenheit use the interval scale, where “0” degrees does not mean “no temperature” at all.

4. Ratio Scale Level

Ratio scale data is like interval scale data, but it has a true 0 point and ratios can be calculated. For example, four multiple choice statistics final exam scores were recorded as 80, 68, 20 and 92 (out of a maximum of 100 marks). The grades are computer generated. The data can be put in order from lowest to highest: 20, 68, 80, 92 or vice versa. The differences between the data have meaning: the score 92 is more than the score 68 by 24 points. Ratios can also be calculated because the smallest possible score is 0. So, 80 is four times 20.

In other words, the score of 80 is four times better than the score of 20. So, we can add, subtract, multiply and divide ratio level values. E.g.: weight of a person. It has a real zero point, i.e. zero weight means that the person has no weight. Also, we can add, subtract, multiply and divide weights on the real scale for comparisons.

Statistical analysis of data

What is central tendency?

The central tendency is a mathematical technique that helps to identify a single value that represents the middle of the data.

Definition: “Central tendency” is stated as the summary of a dataset in a single value that represents the entire distribution of the data domain (or dataset).

Measure of Central Tendency

Statistics is the science of data: a collection of mathematical techniques that help to extract information from data. Statistics usually deals with large datasets, and measures of central tendency are used to understand and analyse such data.

We can perform statistical analysis using the Python programming language. To do so, we import the statistics library into the program. Some important functions used in the programs in this module are:

- mean() -> returns the mean of the data

- median() -> returns the median of the data

- mode() -> returns the mode of the data

- variance() -> returns the variance of the data

- stdev() -> returns the standard deviation of the data

1. Mean

The mean is the average of a set of numbers. To find the mean value, first add all numbers in the data set and divide by the number of values in the set.

M = ∑ fx / n

where M = Mean

∑ = Sum total of the scores

f = Frequency of the distribution

x = Scores

n = Total number of cases

Example,

Calculate the mean of the following grouped data

| Class | Frequency |

|---|---|

| 2 – 4 | 3 |

| 4 – 6 | 4 |

| 6 – 8 | 2 |

| 8 – 10 | 1 |

| Class | Frequency | Mid Value (x) | f.x |

|---|---|---|---|

| 2 – 4 | 3 | 3 | 9 |

| 4 – 6 | 4 | 5 | 20 |

| 6 – 8 | 2 | 7 | 14 |

| 8 – 10 | 1 | 9 | 9 |

| Total | n = 10 | | ∑ f.x = 52 |

Mean (M) = ∑ fx / n

= 52 / 10

= 5.2
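The grouped-mean calculation above can also be checked with a few lines of Python. This is only a minimal sketch: the variable names are chosen for illustration, and the mid value of each class is taken as the average of its lower and upper limits, as in the table.

```python
# Class intervals and frequencies from the table above.
classes = [(2, 4), (4, 6), (6, 8), (8, 10)]
frequencies = [3, 4, 2, 1]

# Mid value (x) of each class: average of its lower and upper limits.
mid_values = [(low + high) / 2 for low, high in classes]

# Sum of f * x over all classes, and the total number of cases n.
sum_fx = sum(f * x for f, x in zip(frequencies, mid_values))
n = sum(frequencies)

grouped_mean = sum_fx / n
print("Sum of f.x =", sum_fx)
print("n =", n)
print("Mean =", grouped_mean)
```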

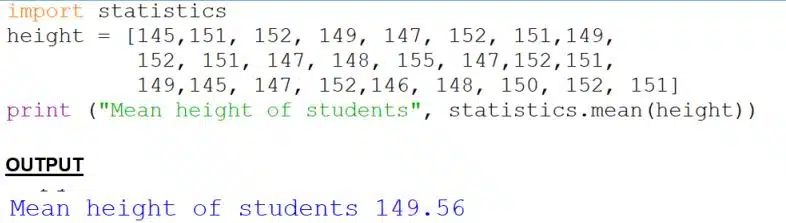

Using python

There are 25 students in a class. Their heights are given below. Write a Python Program to find the mean.

Heights -> 145, 151, 152, 149, 147, 152, 151,149, 152, 151, 147, 148, 155, 147,152,151, 149,145, 147, 152,146, 148, 150, 152, 151
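One possible solution is sketched below. It uses the mean() function from the statistics library mentioned earlier; the variable names are illustrative only.

```python
import statistics

# Heights of the 25 students, as listed above.
heights = [145, 151, 152, 149, 147, 152, 151, 149, 152, 151,
           147, 148, 155, 147, 152, 151, 149, 145, 147, 152,
           146, 148, 150, 152, 151]

# mean() adds all the values and divides by the number of values.
mean_height = statistics.mean(heights)
print("Mean height:", mean_height)
```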

2. Median

The median is another measure of central tendency. It is the positional value that divides the group into two equal parts, one part comprising all values greater than the median and the other part all values smaller than the median.

Example,

Following series shows marks in mathematics of students learning AI

| 17 | 32 | 35 | 15 | 21 | 41 | 32 | 11 | 10 | 20 | 27 | 28 | 30 |

We arrange this data in an ascending or descending order.

| 10 | 11 | 15 | 17 | 20 | 21 | 27 | 28 | 30 | 32 | 32 | 35 | 41 |

As 27 is in the middle of this data position wise, therefore Median = 27

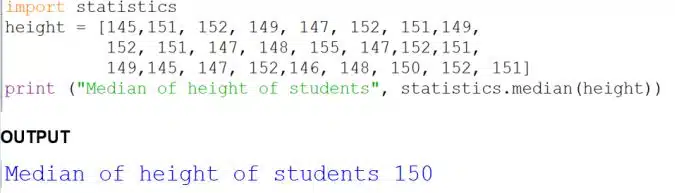

Python program,

There are 25 students in a class. Their heights are given below. Write a Python Program to find the median.

Heights -> 145, 151, 152, 149, 147, 152, 151,149, 152, 151, 147, 148, 155, 147,152,151,149,145, 147, 152,146, 148, 150, 152, 151

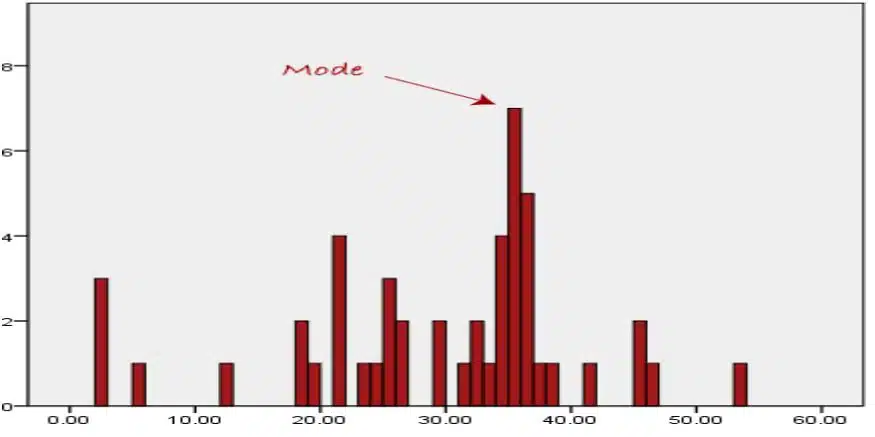

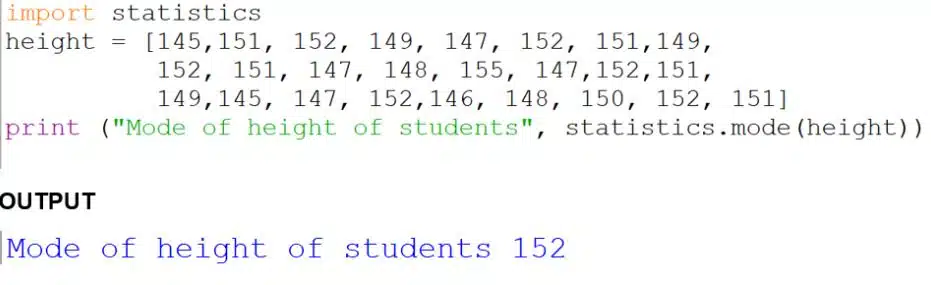
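A minimal sketch of such a program, assuming the median() function from the statistics library, could look like this. With 25 values, the median is the 13th value once the data is sorted.

```python
import statistics

# Heights of the 25 students, as listed above.
heights = [145, 151, 152, 149, 147, 152, 151, 149, 152, 151,
           147, 148, 155, 147, 152, 151, 149, 145, 147, 152,
           146, 148, 150, 152, 151]

# median() sorts the data internally and returns the middle value.
median_height = statistics.median(heights)
print("Median height:", median_height)

# The same result found by hand: sort, then pick the middle position.
sorted_heights = sorted(heights)
middle_value = sorted_heights[len(sorted_heights) // 2]
print("Middle value of the sorted list:", middle_value)
```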

3. Mode

Mode is another important measure of central tendency of statistical series. It is the value which occurs most frequently in the data series. It represents the highest bar in a bar chart or histogram. An example of a mode is presented below:

Example,

Ages of the students of a class

Age (years): 22, 24, 17, 18, 17, 19, 18, 21, 20, 21, 20, 23, 22, 22, 22, 22, 21, 24

- We arrange this series in ascending order as 17, 17, 18, 18, 19, 20, 20, 21, 21, 21, 22, 22, 22, 22, 22, 23, 24, 24

- An inspection of the series shows that 22 occurs most frequently, hence Mode = 22

Python program,

Write a program to find the mode

Heights -> 145,151, 152, 149, 147, 152, 151,149, 152, 151, 147, 148, 155, 147,152,151,149, 145, 147, 152,146, 148, 150, 152, 151
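The following sketch shows one way to do this with the mode() function from the statistics library. The Counter step is an optional addition for illustration: it shows how often each height occurs, so the mode can be verified by eye.

```python
import statistics
from collections import Counter

# Heights of the 25 students, as listed above.
heights = [145, 151, 152, 149, 147, 152, 151, 149, 152, 151,
           147, 148, 155, 147, 152, 151, 149, 145, 147, 152,
           146, 148, 150, 152, 151]

# mode() returns the value that occurs most frequently.
mode_height = statistics.mode(heights)
print("Mode of heights:", mode_height)

# Frequency of every height, most common first.
frequency = Counter(heights)
print("Frequencies:", frequency.most_common())
```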

When do we use mean, median and mode:

| Mean | Median | Mode |

|---|---|---|

| The mean is a good measure of the central tendency when a data set contains values that are relatively evenly spread with no exceptionally high or low values. | The median is a good measure of the central value when the data include exceptionally high or low values. The median is the most suitable measure of average for data classified on an ordinal scale. | The mode is used when you need to find the peak of the distribution, and there may be more than one peak. For example, it is important to print more of the most popular books, because printing different books in equal numbers would cause a shortage of some books and an oversupply of others. |

Variance and Standard Deviation

Variance and standard deviation are both statistical measurements of spread. Variance is a measure of how far data points vary from the mean, while standard deviation is the square root of the variance. Variance and standard deviation are helpful when we want to know how widely the data is spread around the central value of the dataset.

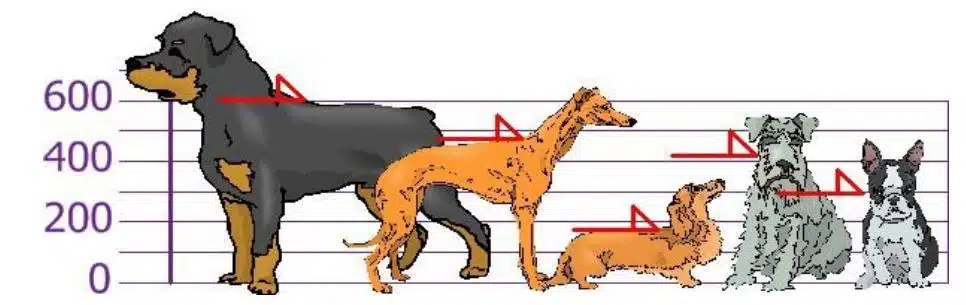

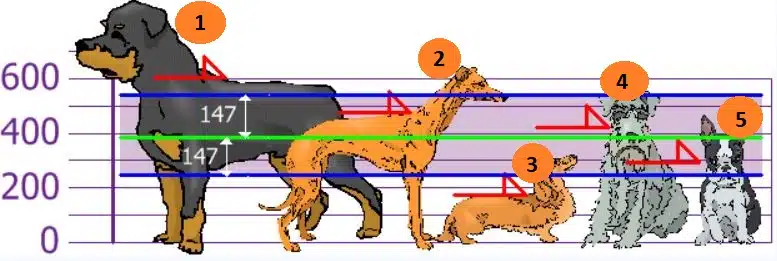

Let’s look at an example with 5 dogs, where we want to find out which dogs are unusually tall and which are unusually short.

Step 1: Measure the height (at the shoulder) of 5 dogs (in millimetres)

As you can see, their heights are: 600mm, 470mm, 170mm, 430mm and 300mm.

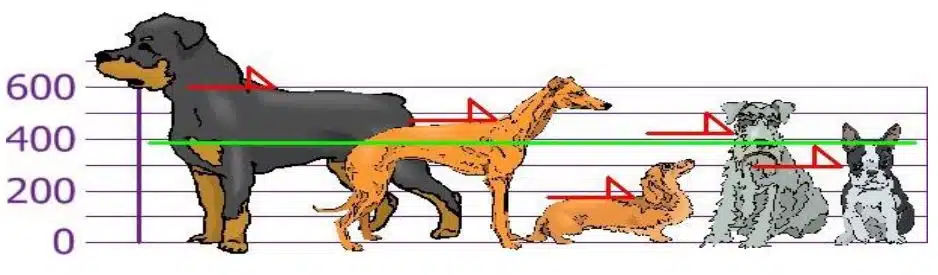

Let us calculate their mean,

Mean = (600 + 470 + 170 + 430 + 300) / 5 = 1970 / 5 = 394 mm

Step 2: Now let us plot again after taking mean height (The green Line)

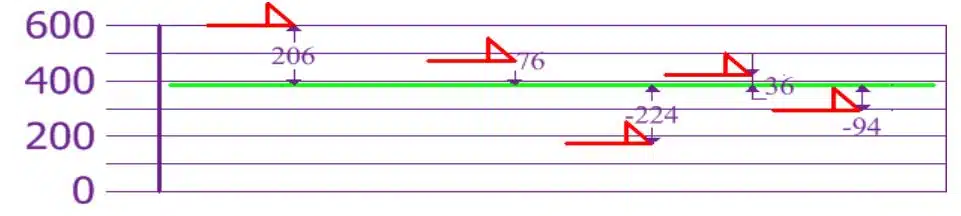

Step 3: Now, let us find the deviation of dogs’ height from the mean height

Calculate the difference (from mean height), square them, and find the average. This average is the value of the variance.

Variance = [ (206)² + (76)² + (−224)² + (36)² + (−94)² ] / 5 = 108520 / 5 = 21704

And standard deviation is the square root of the variance.

Standard deviation = √21704 ≈ 147.32

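Step 4: Now we can identify which heights lie more than one standard deviation (147.32 mm) away from the mean (394 mm). The first dog (600 mm) is more than one standard deviation above the mean, so it is tall, and the third dog (170 mm) is more than one standard deviation below the mean, so it is short.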
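The four steps can be reproduced in Python as a rough sketch. Because the worked example divides by the number of dogs (5), the sketch uses pvariance() and pstdev(), the population versions in the statistics library. The describe() helper and its labels are hypothetical names introduced only for this illustration.

```python
import statistics

# Heights of the 5 dogs in millimetres, from Step 1.
dog_heights = [600, 470, 170, 430, 300]

mean_height = statistics.mean(dog_heights)
# Population variance: average of the squared differences from the mean.
variance = statistics.pvariance(dog_heights)
# Population standard deviation: square root of the variance.
std_dev = statistics.pstdev(dog_heights)

def describe(height):
    """Label a height by comparing it with mean +/- one standard deviation."""
    if height > mean_height + std_dev:
        return "tall"
    if height < mean_height - std_dev:
        return "short"
    return "typical"

labels = [describe(h) for h in dog_heights]

print("Mean:", mean_height)
print("Variance:", variance)
print("Standard deviation:", round(std_dev, 2))
for height, label in zip(dog_heights, labels):
    print(height, "mm ->", label)
```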

Some important facts about variance and standard deviation

- A small variance indicates that the data points tend to be very close to the mean, and to each other.

- A high variance indicates that the data points are very spread out from the mean, and from one another.

- A low standard deviation indicates that the data points tend to be very close to the mean.

- A high standard deviation indicates that the data points are spread out over a large range of values.

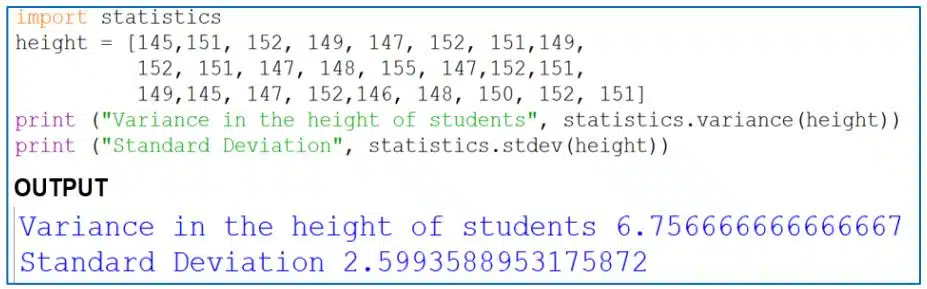

Python program to find variance and standard deviation

Write a program to find the variance and standard deviation.

Heights -> 145, 151, 152, 149, 147, 152, 151, 149, 152, 151, 147, 148, 155, 147, 152, 151, 149, 145, 147, 152, 146, 148, 150, 152, 151
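A possible solution is sketched below. Note one detail that is easy to miss: variance() and stdev() in the statistics library treat the data as a sample and divide by n − 1, while pvariance() and pstdev() divide by n, as in the dog example above. The sketch prints both so the difference is visible; which pair to use depends on whether the 25 heights are regarded as the whole population or as a sample.

```python
import statistics

# Heights of the 25 students, as listed above.
heights = [145, 151, 152, 149, 147, 152, 151, 149, 152, 151,
           147, 148, 155, 147, 152, 151, 149, 145, 147, 152,
           146, 148, 150, 152, 151]

# Sample versions (divide by n - 1).
sample_variance = statistics.variance(heights)
sample_std_dev = statistics.stdev(heights)

# Population versions (divide by n).
population_variance = statistics.pvariance(heights)
population_std_dev = statistics.pstdev(heights)

print("Sample variance:", sample_variance)
print("Sample standard deviation:", sample_std_dev)
print("Population variance:", population_variance)
print("Population standard deviation:", population_std_dev)
```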

Representation of Data

Representation of data helps to summarize a large amount of data in a simple format that is compact and produces meaningful information. Representation of data is a technique for presenting large volumes of data in such a manner that enables the user to interpret the important data with minimum effort and time. Data representation techniques are broadly classified in two ways:

- Non-Graphical Technique: This is the older way of presenting data, in tabular form, and it is not suitable for large datasets. Non-graphical techniques are also less suitable when our objective is to make decisions after analyzing a set of data.

- Graphical Technique: Statistical data is displayed visually in the form of points, lines, dots, and other geometrical forms. A graphical presentation makes it easier for the human brain to deal with large datasets.

Matplotlib in Python

Matplotlib is a popular data visualization library in Python for creating bar charts, scatter plots, histograms, line charts, etc., just using a few lines of code.

Some of the common functions and their descriptions are given below.

| Function Name | Description |

|---|---|

| title ( ) | Adds title to the chart/graph |

| xlabel ( ) | Sets label for X-axis |

| ylabel ( ) | Sets label for Y-axis |

| xlim ( ) | Sets the value limit for X-axis |

| ylim( ) | Sets the value limit for Y-axis |

| xticks ( ) | Sets the tick marks in X-axis |

| yticks( ) | Sets the tick marks in Y-axis |

| show ( ) | Displays the graph on the screen |

| savefig("address") | Saves the graph at the address specified as the argument. |

| figure ( figsize = value in tuple format) | Determines the size of the plot in which the graph is drawn. Values should be supplied in tuple format to the attribute figsize which is passed as argument. |

List of markers and their descriptions:

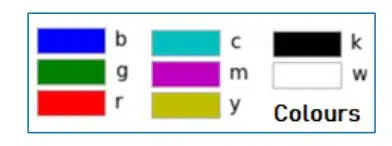

List of Graph Colour Codes:

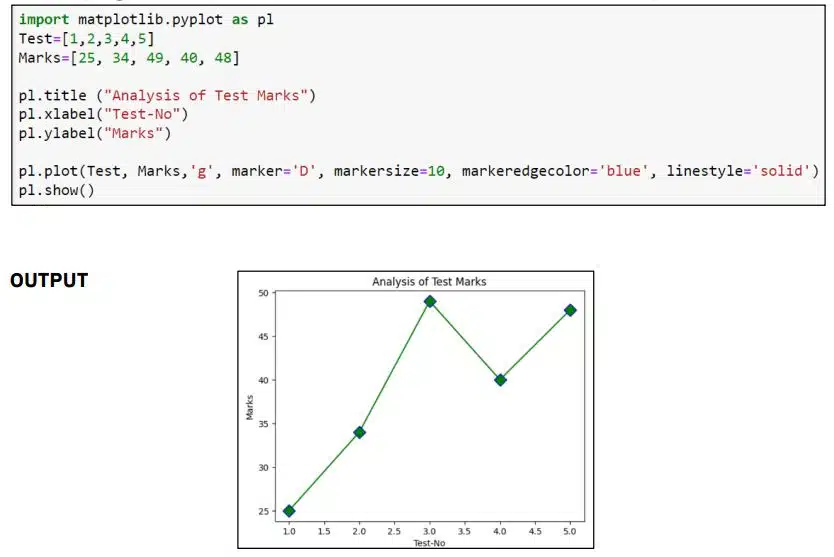

1. Line Graph

A line graph is a powerful tool used to represent continuous data along a numbered axis. It allows us to visualize trends and changes in data points over time.

Python program,

Write a program to draw a line chart. To draw a line chart, we use the plot() function.

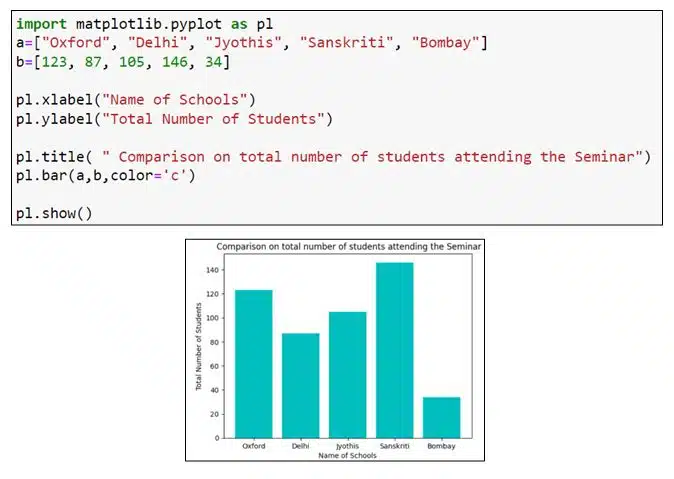

2. Bar Graph

A bar chart or bar graph is a graph that presents categorical data with rectangular bars with heights or lengths proportional to the values that they represent. A bar chart is used to compare different categories.

Python program,

Create a bar graph to illustrate the distribution of students from various schools who attended a seminar on “Deep Learning”. The total number of students from each school is provided below.

| Oxford Public School | Delhi Public School | Jyothis Central School | Sanskriti School | Bombay Public School |

|---|---|---|---|---|

| 123 | 87 | 105 | 146 | 34 |

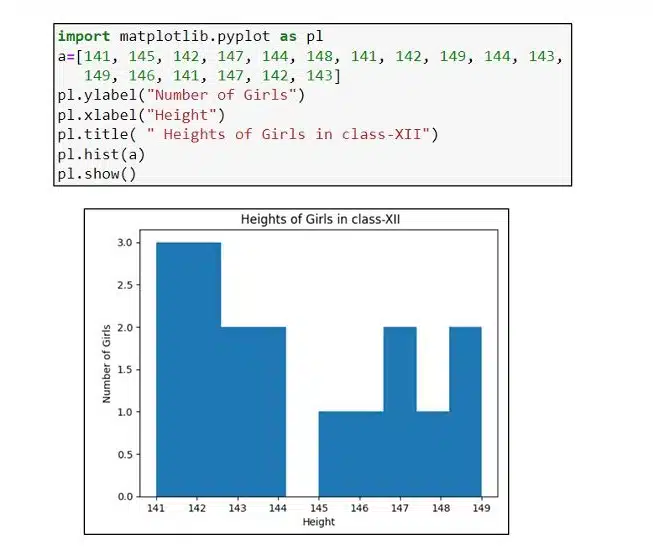
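A minimal sketch of such a program is given below, using the bar() function together with the title(), xlabel(), ylabel(), xticks(), figure() and savefig() functions from the table above. The figure size, colour, label rotation and the output file name seminar_bar_graph.png are illustrative assumptions, not values from the textbook. The sketch selects the non-interactive "Agg" backend so that it also runs on machines without a display.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: draw to a file; remove to use a window
import matplotlib.pyplot as plt

# Schools and number of students, from the table above.
schools = ["Oxford Public School", "Delhi Public School",
           "Jyothis Central School", "Sanskriti School",
           "Bombay Public School"]
students = [123, 87, 105, 146, 34]

plt.figure(figsize=(10, 5))                  # size of the plot (assumed)
bars = plt.bar(schools, students, color="b")  # one bar per school
plt.title("Students attending the Deep Learning seminar")
plt.xlabel("School")
plt.ylabel("Number of students")
plt.xticks(rotation=20)                      # tilt long school names
plt.tight_layout()
plt.savefig("seminar_bar_graph.png")         # hypothetical file name
```

To view the graph in a window instead of saving it, remove the matplotlib.use("Agg") line and call plt.show() in place of plt.savefig().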

3. Histogram

Histograms are graphical representations of data distribution, with vertical rectangles depicting the frequencies of different value ranges. The histogram can summarize discrete or continuous data that are measured on an interval scale.

Python program,

Given a dataset containing the heights of girls in class XII, construct a histogram to visualize the distribution of heights.

141, 145, 142, 147, 144, 148, 141, 142, 149, 144, 143, 149, 146, 141, 147, 142, 143

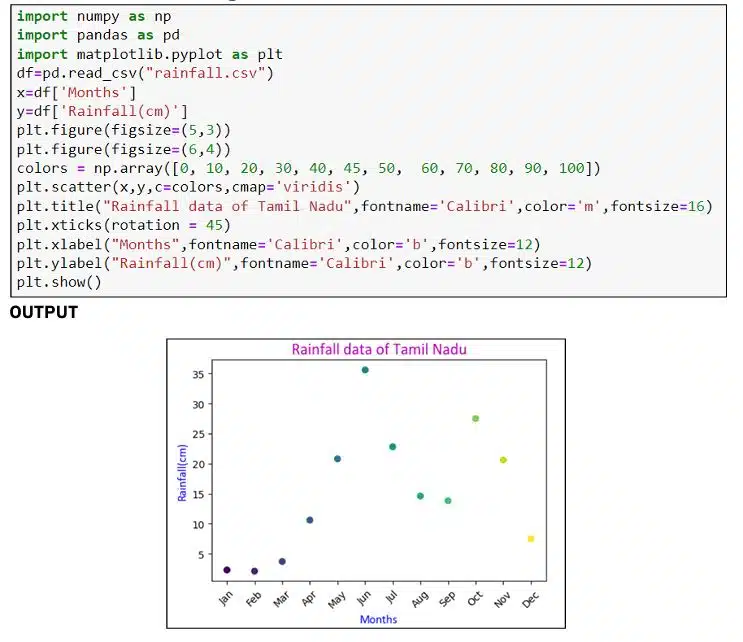
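One way to write this program is sketched below with the hist() function. The bin edges (141, 143, ..., 151), the edge colour and the output file name heights_histogram.png are assumptions made for this illustration; different bins would give a differently shaped histogram. As an assumption for portability, the sketch uses the non-interactive "Agg" backend and saves the figure to a file.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: draw to a file; remove to use a window
import matplotlib.pyplot as plt

# Heights of the girls in class XII, as listed above.
heights = [141, 145, 142, 147, 144, 148, 141, 142, 149,
           144, 143, 149, 146, 141, 147, 142, 143]

# Bin edges of width 2 covering the data (an illustrative choice).
bins = list(range(141, 152, 2))

# hist() returns the frequency in each bin, the bin edges, and the bars.
counts, bin_edges, _ = plt.hist(heights, bins=bins, edgecolor="black")
plt.title("Heights of girls in class XII")
plt.xlabel("Height")
plt.ylabel("Frequency")
plt.savefig("heights_histogram.png")  # hypothetical file name

print("Frequency in each bin:", counts)
```

Replacing plt.savefig() with plt.show(), and removing the matplotlib.use("Agg") line, displays the histogram on the screen instead.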

4. Scatter Graph

Scatter plots visually represent relationships between two variables by plotting data points along both the x and y axes. Their strength lies in their ability to clearly depict trends, clusters, and relationships within datasets.

Python program,

Write a program to draw a scatter chart to visualize the comparative rainfall data for 12 months in Tamil Nadu using the CSV file “rainfall.csv”.

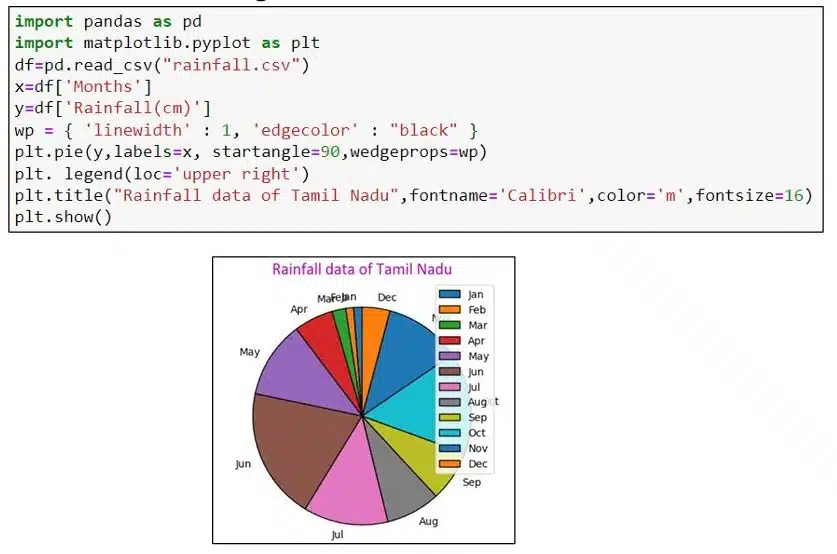

5. Pie Chart

A pie chart is a circular graph divided into segments or sections, each representing a relative proportion or percentage of the total. Pie charts are commonly used to visualize data from a small table, but it is recommended to limit the number of categories to seven to maintain clarity. However, zero values cannot be depicted in pie charts.

Python program,

Write a program to draw a pie chart to visualize the comparative rainfall data for 12 months in Tamil Nadu using the CSV file “rainfall.csv”.

Introduction to matrices

Knowledge of matrices is necessary in all branches of mathematics, and the matrix is one of its most powerful tools. In mathematics, a matrix (plural: matrices) is a rectangular arrangement of numbers. The numbers are arranged in tabular form as rows and columns.

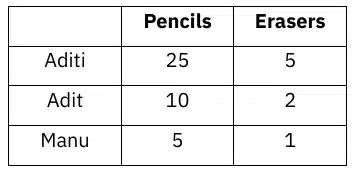

Let us understand with the help of an example: Consider

- Aditi bought 25 pencils and 5 erasers

- Adit bought 10 pencils and 2 erasers

- Manu bought 5 pencils and 1 eraser

The above information can be arranged in tabular form as follows

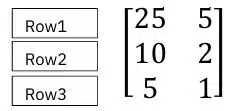

And this can be represented as

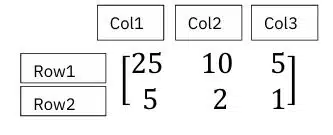

The entries in the rows represent number of pencils and erasers bought by Aditi, Adit and Manu respectively. Or in another form as

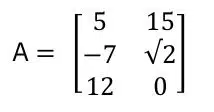

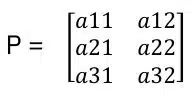

Here, the entries in the columns represent number of pencils and erasers bought by Aditi, Adit and Manu respectively. We denote matrices by capital letters, for example

Order of a matrix

A matrix with m rows and n columns is called a matrix of order m × n, or simply an m × n matrix (read as an m by n matrix). So, the matrix A in the above example is a 3 × 2 matrix. The number of elements is m × n, so here 3 × 2 = 6 elements. Each individual element is represented as aij, where i represents the row and j represents the column.

Operations on Matrices

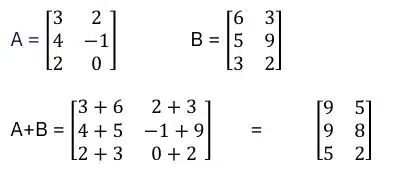

1. Addition of matrices – The sum of two matrices is obtained by adding the corresponding elements of the given matrices. Also, the two matrices have to be of the same order. Example:

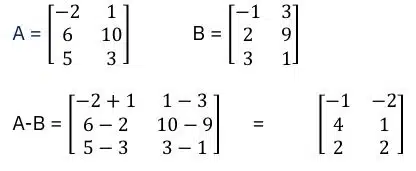

2. Difference of matrices – The difference A – B is defined as a matrix where each element is obtained by subtracting the corresponding elements (aij – bij). Matrices A and B must be of the same order. Example

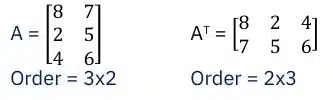

3. Transpose of a matrix

The transpose of a matrix is the matrix obtained by interchanging its rows and columns. The transpose of a matrix A is denoted by A′ or Aᵀ.
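The three operations can be tried out in plain Python by storing a matrix as a list of rows. This is only a sketch under stated assumptions: matrix A holds the pencil and eraser counts from the example above, matrix B is a hypothetical second matrix of the same order, and the helper function names are made up for this illustration.

```python
def order(matrix):
    """Return the order (rows, columns) of a matrix stored as a list of rows."""
    return (len(matrix), len(matrix[0]))

def add_matrices(a, b):
    """Add corresponding elements; both matrices must have the same order."""
    if order(a) != order(b):
        raise ValueError("Matrices must be of the same order")
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def subtract_matrices(a, b):
    """Subtract corresponding elements (aij - bij); orders must match."""
    if order(a) != order(b):
        raise ValueError("Matrices must be of the same order")
    return [[x - y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def transpose(matrix):
    """Interchange rows and columns."""
    return [list(column) for column in zip(*matrix)]

# Rows: Aditi, Adit, Manu. Columns: pencils, erasers.
A = [[25, 5],
     [10, 2],
     [5, 1]]
# Hypothetical second matrix of the same order, for illustration only.
B = [[1, 2],
     [3, 4],
     [5, 6]]

print("Order of A:", order(A))
print("A + B =", add_matrices(A, B))
print("A - B =", subtract_matrices(A, B))
print("Transpose of A =", transpose(A))
```

The transpose of the 3 × 2 matrix A is a 2 × 3 matrix whose first row lists the pencils and whose second row lists the erasers.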

Applications of matrices in AI

Matrices are used throughout the field of machine learning for computing:

- Image Processing – Digital images can be represented using matrices. Each pixel on the image has a numerical value. These values represent the intensity of the pixels. A grayscale or black-and-white image has pixel values ranging from 0 to 255. Smaller values closer to zero represent darker shades, whereas bigger ones closer to 255 represent lighter or white shades. So, in a computer, every image is kept as a matrix of integers called a Channel.

- Recommender systems use matrices to relate users to the products they have purchased or viewed.

- In Natural Language processing, vectors depict the distribution of a particular word in a document. Vectors are one-dimensional matrices.

Data preprocessing

Data preprocessing is a crucial step in the machine learning process aimed at making datasets more machine learning-friendly. It involves several processes to clean, transform, reduce, integrate, and normalize data:

1. Data Cleaning

- Missing Data: Missing data occurs when values are absent from the dataset. Strategies for handling missing data include deleting rows or columns with missing values, imputing missing values with estimates, or using algorithms that can handle missing data.

- Outliers: Outliers are data points that significantly differ from the rest of the data, often due to errors or rare events. Dealing with outliers involves identifying and removing them, transforming the data, or using robust statistical methods to reduce their impact.

- Inconsistent Data: Data containing typographical errors or mismatched data types is corrected so that the data is consistent.

- Duplicate Data: Duplicate data will be identified and removed to ensure data integrity.
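Two of these cleaning steps, removing duplicates and imputing missing values, can be sketched in plain Python. The records below are hypothetical, and replacing a missing value with the mean of the known values is just one of the estimation strategies mentioned above.

```python
import statistics

# Hypothetical records of (student_id, marks); None marks a missing value.
records = [(1, 72), (2, None), (3, 65), (3, 65), (4, 88)]

# Duplicate data: keep the first occurrence of each record, preserving order.
unique_records = list(dict.fromkeys(records))

# Missing data: estimate the missing marks using the mean of the known marks.
known_marks = [marks for _, marks in unique_records if marks is not None]
mean_marks = statistics.mean(known_marks)
cleaned_records = [
    (student_id, marks if marks is not None else mean_marks)
    for student_id, marks in unique_records
]

print("After removing duplicates:", unique_records)
print("After imputing missing marks:", cleaned_records)
```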

2. Data Transformation

Categorical variables are converted to numerical variables. New features are created, and existing features are modified if needed.

3. Data Reduction

Dimensionality reduction, i.e. reducing the number of features of the data set, is done. If the data set is too large to handle, sampling techniques are applied.

4. Data Integration and Normalization

If data is stored in multiple sources or formats, they are merged or aggregated together. Then the data is normalized to ensure that all features have a similar scale and distribution which can improve machine learning models.
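As an illustration of normalization, the sketch below applies min-max scaling, one common technique that rescales every feature to the range 0 to 1. The notes do not name a specific method, so the choice of min-max scaling is an assumption; the heights are reused from the dog example and the weights are hypothetical values.

```python
def min_max_normalize(values):
    """Rescale values to the range 0..1 using (v - min) / (max - min)."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        # All values are equal, so there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lowest) / (highest - lowest) for v in values]

# Two features on very different scales.
heights_mm = [600, 470, 170, 430, 300]       # from the dog example
weights_kg = [32.0, 25.5, 6.0, 21.0, 12.5]   # hypothetical values

normalized_heights = min_max_normalize(heights_mm)
normalized_weights = min_max_normalize(weights_kg)

print("Normalized heights:", normalized_heights)
print("Normalized weights:", normalized_weights)
```

After scaling, both features lie between 0 and 1, so neither dominates the other simply because of its units.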

5. Feature Selection

The most relevant features that contribute the most to the target variable are selected and irrelevant data are removed.

Data in modelling and evaluation

After preparing the data, the data is divided into two parts: the first is the training set (which helps to teach the model), and the other is the testing set (to check the performance). There are different types of machine learning algorithms, and the best algorithm for the data depends on the type of problem, such as classification (sorting data into categories), regression (predicting numbers), or clustering (grouping similar items).
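The division into a training set and a testing set can be sketched as follows. The dataset of ten numbered records, the 80/20 ratio and the random seed are all assumptions chosen for illustration; real projects often use a library function for this step.

```python
import random

# Hypothetical prepared dataset: ten records, numbered 0 to 9.
data = list(range(10))

# Shuffle a copy so the split is random but the original order is kept.
random.seed(42)  # fixed seed so the split is repeatable
shuffled = data[:]
random.shuffle(shuffled)

# Use 80% of the records for training and the remaining 20% for testing.
split_index = int(len(shuffled) * 0.8)
train_set = shuffled[:split_index]
test_set = shuffled[split_index:]

print("Training set:", train_set)
print("Testing set:", test_set)
```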

Techniques like train-test split and cross-validation are used to check that the model works properly on new data. To measure performance, different evaluation metrics are used for different types of models. For example, classification models are evaluated using accuracy, precision, recall, and F1-score, while regression models are evaluated using RMSE, MSE, MAE, and R-squared. These metrics help us choose models that make good decisions and use the technology effectively.
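To make the classification metrics concrete, the sketch below computes accuracy, precision, recall and F1-score from their standard definitions. The actual and predicted labels are hypothetical (1 = positive class, 0 = negative class) and are not taken from any real model.

```python
# Hypothetical actual and predicted labels for eight test records.
actual = [1, 0, 1, 1, 0, 1, 0, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]

pairs = list(zip(actual, predicted))
true_positive = sum(1 for a, p in pairs if a == 1 and p == 1)
true_negative = sum(1 for a, p in pairs if a == 0 and p == 0)
false_positive = sum(1 for a, p in pairs if a == 0 and p == 1)
false_negative = sum(1 for a, p in pairs if a == 1 and p == 0)

# Accuracy: share of all predictions that are correct.
accuracy = (true_positive + true_negative) / len(actual)
# Precision: share of predicted positives that are really positive.
precision = true_positive / (true_positive + false_positive)
# Recall: share of actual positives that the model found.
recall = true_positive / (true_positive + false_negative)
# F1-score: harmonic mean of precision and recall.
f1_score = 2 * precision * recall / (precision + recall)

print("Accuracy:", accuracy)
print("Precision:", precision)
print("Recall:", recall)
print("F1-score:", f1_score)
```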

Disclaimer: We have made an effort to provide you with an accurate handout of “Data Literacy Class 11 AI Notes”. If you feel that there is any error or mistake, please contact me at anuraganand2017@gmail.com. The CBSE study material presented on our website is for educational purposes and is not our copyright. All the above content and screenshots are taken from the Artificial Intelligence Class 11 CBSE Textbook and Support Material, which is available on the CBSEACADEMIC website. This Textbook and Support Material are the copyright of the Central Board of Secondary Education. We are only providing a medium and helping students to improve their performance in the examination.

Images shown above are the property of individual organizations and are used here for reference purposes only.

For more information, refer to the official CBSE textbooks available at cbseacademic.nic.in