Machine Learning Algorithms Class 11 Notes – The CBSE has updated the syllabus for Class XI (Code 843). These notes are based on the updated syllabus and the updated CBSE textbook. All the important information is taken from the Artificial Intelligence Class XI Textbook, based on the CBSE Board pattern.

Machine Learning Algorithms Class 11 Notes

Machine learning in a nutshell

Machine Learning (ML) is a part of artificial intelligence (AI) that focuses on teaching computers to learn from data and make decisions without being explicitly programmed.

Some important points about machine learning

- ML algorithms learn from various types of data, including images, text, sensor readings, and historical records.

- ML models identify patterns and relationships within the data to make predictions or decisions.

- Some common ML algorithms include decision trees, neural networks, and support vector machines.

- Applications of ML include recommendation systems (such as those used by Netflix), speech recognition, medical diagnosis, and autonomous vehicles.

- ML algorithms are also used in chatbots, personalized ads, and fraud detection systems.

- ML transforms data into knowledge, enabling computers to learn, adapt, and make decisions autonomously.

- ML also powers personalized shopping experiences and virtual assistants like Siri and Alexa.

Types of Machine Learning

There are three main types of machine learning: Supervised Learning, Unsupervised Learning, and Reinforcement Learning.

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning

1. Supervised Learning

Supervised learning is a powerful approach that allows machines to learn from labeled data and make predictions or decisions based on that learning. Within supervised learning, there are two primary types of algorithms:

- Regression – works with continuous data

- Classification – works with discrete data

a. Regression

Understanding Correlation: The Foundation of Regression Analysis

Correlation is a statistical method of measurement that shows how closely related two variables are. It is a common tool for analyzing data sets. If the change in one variable appears to be accompanied by a change in the other variable, the two variables are said to be correlated, and this interdependence is called correlation.

Types of Correlation:

a. Positive Correlation: In a positive correlation, both variables move in the same direction. As one variable increases, the other also tends to increase, and vice versa.

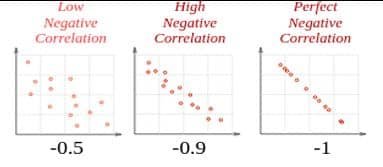

b. Negative Correlation: Conversely, in a negative correlation, variables move in opposite directions. An increase in one variable is associated with a decrease in the other, and vice versa.

c. Zero Correlation: When there is no apparent relationship between two variables, they are said to have zero correlation. Changes in one variable do not predict changes in the other.

The value of the correlation coefficient ranges from -1 to 1:

- 1 is a perfect positive correlation

- 0 is no correlation (the values don’t seem linked at all)

- -1 is a perfect negative correlation

Causation

Causation indicates that one event is the result of the occurrence of another event. For example, hot weather causes people to use more sunscreen and eat more ice cream, and smoking causes an increased risk of developing lung cancer. In both examples, the variables are also correlated.

However, correlation does not always imply causation. For example, smoking is correlated with alcoholism, but smoking does not cause alcoholism. Therefore, we can say that correlation is not always causation.

Pearson’s r

The Pearson correlation method is the most common method for measuring the correlation between numerical variables. It assigns a value between -1 and 1, where 0 is no correlation, 1 is total positive correlation, and -1 is total negative correlation.

Definition: Pearson’s r measures the strength and direction of the linear relationship between two continuous variables.

The requirements when considering the use of Pearson’s correlation coefficient are:

- Scale of measurement should be interval or ratio.

- Variables should be approximately normally distributed.

- The association should be linear.

- There should be no outliers in the data.
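Pearson’s r can be computed straight from its definition: the sum of the products of each variable’s deviations from its mean, divided by the square root of the product of their summed squared deviations. The sketch below is a minimal Python version; the toy lists are made up purely to reproduce the three reference values (1, -1 and 0) listed earlier.

```python
import math


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient r for two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Numerator: how x and y move together around their means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Denominator: the spread of each variable around its own mean
    sx = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sy = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return cov / (sx * sy)


# Hypothetical toy data, chosen only to illustrate the three reference values
hours = [1, 2, 3, 4, 5]
print(pearson_r(hours, [10, 20, 30, 40, 50]))  # perfect positive: very close to 1.0
print(pearson_r(hours, [50, 40, 30, 20, 10]))  # perfect negative: very close to -1.0
print(pearson_r(hours, [3, 1, 4, 1, 3]))       # no linear relationship: about 0.0
```

On Python 3.10 or later, the standard library’s `statistics.correlation(x, y)` computes the same Pearson coefficient.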

It is important to note that regression analysis may not be suitable in certain situations:

- No Correlation: If there is no correlation between the variables, meaning they change independently of each other, regression analysis will not provide meaningful insights or predictions.

- Non-linear Relationships: While regression can model linear relationships well, it may not capture more complex, non-linear relationships effectively. In such cases, alternative techniques like polynomial regression or non-linear regression may be more appropriate.

- Outliers: Outliers, or extreme data points, can disproportionately influence the regression model and lead to inaccurate predictions. In the presence of outliers, it is essential to assess their impact and consider alternative modeling approaches.

- Violation of Assumptions: Regression analysis relies on certain assumptions, such as the linearity of relationships and the absence of multicollinearity (high correlation between predictor variables). If these assumptions are violated, the results of the regression analysis may be unreliable.

What is regression?

Regression is a statistical technique used to model the relationship between a dependent variable and one or more independent variables. In simpler terms, regression helps us understand how changes in one or more variables are associated with changes in another variable.

What is a regression line?

A regression line is a straight line that best represents the relationship between two variables in a dataset. It is used to predict values and understand how one variable (dependent) changes when another variable (independent) changes.

Let’s look at an example of predicting a person’s salary from their years of experience, using simple linear regression with its formula and regression line:

y = a + bx + e

where:

- y = Salary (dependent variable)

- x = Years of experience (independent variable)

- a = Intercept (starting salary when experience is 0)

- b = Slope (how much salary increases per year of experience)

- e = Error term

Suppose the regression equation for salary prediction is:

y = 30000 + 5000x

This means:

- The starting salary of an employee with 0 years of experience is Rs. 30000.

- For every additional year of experience, the salary increases by Rs. 5000.

Prediction:

If a person has 5 years of experience, then the predicted salary will be:

y = 30000 + (5000 × 5)

y = 30000 + 25000

y = 55000

So, the predicted salary of a person with 5 years of experience is Rs. 55000.

Now, we draw a regression line, which is the best-fit straight line that shows the general trend of how salary increases with experience. The equation of this line is: y = a + bx
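In practice, a and b are estimated from data rather than given. Here is a minimal sketch of the ordinary least-squares estimates in Python; the five data points are hypothetical, constructed so that the fitted line comes out as y = 30000 + 5000x from the example above.

```python
def fit_simple_linear_regression(xs, ys):
    """Return least-squares estimates (a, b) for the line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: b = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x) ** 2)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are equal; the slope is undefined")
    b = sxy / sxx
    # The best-fit line passes through (mean_x, mean_y), which fixes the intercept
    a = mean_y - b * mean_x
    return a, b


def predict_salary(a, b, years):
    """Apply y = a + b*x to a new value of x."""
    return a + b * years


# Hypothetical data: years of experience and salary (Rs.), scattered around a line
experience = [1, 2, 3, 4, 5]
salary = [36000, 38000, 45000, 52000, 54000]

a, b = fit_simple_linear_regression(experience, salary)
print(a, b)                     # 30000.0 5000.0
print(predict_salary(a, b, 5))  # 55000.0
```

Python 3.10+ also offers `statistics.linear_regression(x, y)`, which returns the slope and intercept of the same least-squares line.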

What is linear regression?

Linear regression is one of the most basic types of regression in machine learning. The linear regression model consists of a predictor (independent) variable and a dependent variable that are related linearly to each other. When the data involves more than one independent variable, the model is called multiple linear regression.

Linear regression is further divided into two types:

- Simple Linear Regression: The dependent variable’s value is predicted using a single independent variable in simple linear regression.

- Multiple Linear Regression: In multiple linear regression, more than one independent variable is used to predict the value of the dependent variable.
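Multiple linear regression fits one coefficient per independent variable plus an intercept. Below is a minimal pure-Python sketch that solves the least-squares normal equations; the second feature (number of certifications) and all data values are hypothetical, chosen to follow y = 30000 + 5000x1 + 2000x2 exactly so the recovered coefficients are easy to check.

```python
def fit_multiple_linear_regression(X, y):
    """Least-squares coefficients [b0, b1, ..., bk] for y = b0 + b1*x1 + ... + bk*xk.

    Solves the normal equations (A^T A) beta = A^T y, where A is X with a
    leading column of 1s for the intercept b0.
    """
    A = [[1.0] + [float(v) for v in row] for row in X]
    k = len(A[0])
    # Augmented matrix [A^T A | A^T y]
    aug = [
        [sum(r[i] * r[j] for r in A) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(A, y))]
        for i in range(k)
    ]
    # Gaussian elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("features are linearly dependent; no unique fit")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, k):
            factor = aug[row][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[row][c] -= factor * aug[col][c]
    # Back substitution
    beta = [0.0] * k
    for i in range(k - 1, -1, -1):
        s = sum(aug[i][j] * beta[j] for j in range(i + 1, k))
        beta[i] = (aug[i][k] - s) / aug[i][i]
    return beta


# Hypothetical data: [years of experience, number of certifications] -> salary (Rs.)
X = [[0, 0], [1, 2], [2, 1], [3, 3], [5, 2]]
y = [30000, 39000, 42000, 51000, 59000]

b0, b1, b2 = fit_multiple_linear_regression(X, y)
print(round(b0), round(b1), round(b2))  # 30000 5000 2000
```

For real work, a library solver such as NumPy’s `numpy.linalg.lstsq` is numerically more robust than forming the normal equations by hand.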

Applications of Linear Regression:

- Market Analysis: Linear regression helps understand how different factors like pricing, sales quantity, advertising, and social media engagement relate to each other in the market.

- Sales Forecasting: It predicts future sales by analyzing past sales data along with factors like marketing spending, seasonal trends, and consumer behavior.

- Predicting Salary Based on Experience: Linear regression estimates a person’s salary based on their years of experience, education, and job role, aiding in recruitment and compensation planning.

- Sports Analysis: Linear regression analyzes player and team performance by considering statistics, game conditions, and opponent strength, assisting coaches and team management in decision-making.

- Medical Research: Linear regression examines relationships between factors like age, weight, and health outcomes, helping researchers identify risk factors and evaluate interventions.

Advantages of Linear Regression:

- Simple technique and easy to implement

- Computationally efficient to train

Disadvantages of Linear Regression:

- Sensitivity to outliers, which can significantly impact the analysis.

- Limited to linear relationships between variables.

b. Classification

Classification is a fundamental concept in artificial intelligence and machine learning that involves categorizing data into predefined classes or categories. The main objective of classification is to assign labels to data instances based on their features or attributes.

For example, suppose you live in a gated housing society that has separate dustbins for different types of waste: paper waste, plastic waste, food waste, and so on. When you sort your waste into these dustbins, you are classifying it into different categories, each with its own label.

How Classification Works

In classification tasks within machine learning, the process revolves around categorizing data into distinct groups or classes based on their features. Here is how it typically works:

- Classes or Categories: Data is divided into different classes or categories, each representing a specific outcome or group. For example, in a binary classification scenario, there are two classes: positive and negative.

- Features or Attributes: Each data instance is described by its features or attributes, which provide information about the instance. These features are crucial for the classification model to differentiate between different classes. For instance, in email classification, features might include words in the email text, sender information, and email subject.

- Training Data: The classification model is trained using a dataset known as training data. This dataset consists of labelled examples, where each data instance is associated with a class label. The model learns from this data to understand the relationship between the features and the corresponding class labels.

- Classification Model: An algorithm or technique is used to build the classification model. This model learns from the training data to predict the class labels of new, unseen data instances. It aims to generalize from the patterns and relationships in the training data to make accurate predictions.

- Prediction or Inference: Once trained, the classification model is used to predict the class labels of new data instances. This process, known as prediction or inference, relies on the learned patterns and relationships from the training data.
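The steps above map onto a pair of functions: one for training and one for prediction. The sketch below uses a nearest-centroid classifier, a deliberately simple stand-in chosen here for illustration: training averages the feature vectors of each class, and prediction picks the class whose average is closest. The spam features and numbers are hypothetical.

```python
import math


def train_nearest_centroid(features, labels):
    """Training: compute the average feature vector (centroid) of each class."""
    sums, counts = {}, {}
    for x, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(x)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], x)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict_class(model, x):
    """Inference: assign the class whose centroid is closest to x."""
    return min(model, key=lambda label: math.dist(model[label], x))


# Hypothetical labelled training data for email spam detection.
# Features per email: [number of links, count of the word "free"]
X_train = [[8, 5], [6, 4], [7, 6], [0, 0], [1, 0], [2, 1]]
y_train = ["spam", "spam", "spam", "not spam", "not spam", "not spam"]

model = train_nearest_centroid(X_train, y_train)
print(predict_class(model, [5, 3]))  # spam
print(predict_class(model, [1, 1]))  # not spam
```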

Types of classification

The four main types of classification are:

- Binary Classification

- Multi-Class Classification

- Multi-Label Classification

- Imbalanced Classification

| Classification Type | Description | Examples |
|---|---|---|
| Binary Classification | Classification tasks with two class labels. | Email spam detection (spam or not); conversion prediction (buy or not); medical test (cancer detected or not); exam results (pass/fail) |
| Multi-Class Classification | Classification tasks with more than two class labels. | Face classification; plant species classification; optical character recognition; image classification into thousands of classes |
| Multi-Label Classification | Classification tasks where each example may belong to multiple class labels. | Photo classification, where several objects may be present in one photo (bicycle, apple, person, etc.) |
| Imbalanced Classification | Classification tasks with unequally distributed class labels, typically with a majority and a minority class. | Fraud detection; outlier detection; medical diagnostic tests |

K-Nearest Neighbour Algorithm (KNN)

The K-Nearest Neighbors algorithm, commonly known as KNN or k-NN, is a versatile non-parametric supervised learning technique used for both classification and regression tasks. It operates based on the principle of proximity, making predictions or classifications by considering the similarity between data points.

Why the KNN Algorithm Is Needed:

KNN is particularly useful when dealing with classification problems where the decision boundaries are not clearly defined or when the dataset does not have a well-defined structure. It provides a simple yet effective method for identifying the category or class of a new data point based on its similarity to existing data points.

Steps involved in k-NN

- Select the number of neighbors, K.

- Calculate the Euclidean distance from the new data point to every point in the training data.

- Take the K nearest neighbors as per the calculated Euclidean distance.

- Among these K neighbors, count the number of data points in each category.

- Assign the new data point to the category that has the maximum number of neighbors.

- Our model is ready.
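The k-NN steps above translate almost line for line into Python. A minimal sketch, assuming numeric feature lists and Euclidean distance (`math.dist`, Python 3.8+); the training points and colour labels are hypothetical.

```python
import math
from collections import Counter


def knn_classify(train_points, train_labels, query, k=3):
    """Classify `query` by a majority vote among its k nearest training points."""
    if not 1 <= k <= len(train_points):
        raise ValueError("k must be between 1 and the number of training points")
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k nearest neighbors
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Count labels among those neighbors; on a tie, most_common keeps the
    # label that appears first in the sorted neighbor list
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical training data: two well-separated groups of 2-D points
train_points = [[1, 1], [1, 2], [2, 1], [6, 6], [7, 6], [6, 7]]
train_labels = ["red", "red", "red", "blue", "blue", "blue"]

print(knn_classify(train_points, train_labels, [2, 2], k=3))  # red
print(knn_classify(train_points, train_labels, [5, 5], k=3))  # blue
```

An odd K is a common choice for two classes, since it rules out tied votes.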

Applications of KNN:

- Image recognition and classification

- Recommendation systems

- Healthcare diagnostics

- Text mining and sentiment analysis

- Anomaly detection

Advantages of KNN:

- Easy to implement and understand.

- No explicit training phase: the model learns directly from the training data.

- Suitable for both classification and regression tasks.

- Reasonably tolerant of noisy data when K is large enough, since the majority vote smooths out individual noisy points.

Limitations of KNN:

- Computationally expensive, especially for large datasets.

- Sensitivity to the choice of distance metric and the number of neighbors (K).

- Requires careful preprocessing and feature scaling.

- Not suitable for high-dimensional data due to the curse of dimensionality.

2. Unsupervised Learning

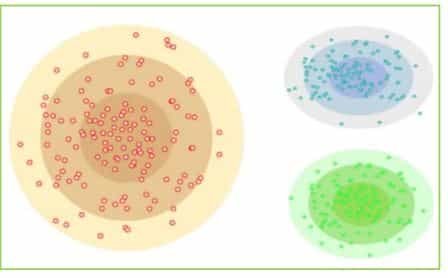

Clustering, or cluster analysis, is a machine learning technique used to group an unlabeled dataset into clusters based on similarity. Clustering algorithms group similar data points together based on common characteristics or features. This approach helps in organizing and making sense of large datasets in tasks such as market segmentation, image recognition, and customer segmentation. Clustering is an unsupervised learning method.

For a real-world example, imagine visiting a shopping center where items are grouped together based on their similarities, such as color or size. Grouping similar items together like this is the basic idea behind clustering.

How Clustering Works:

To cluster data effectively, follow these key steps:

- Prepare the Data: Select the right features for clustering and make sure the data is ready by scaling or transforming it as needed.

- Create Similarity Metrics: Define how similar data points are by comparing their features. This similarity measure is crucial for clustering.

- Run the Clustering Algorithm: Apply a clustering algorithm to group the data. Choose one that works well with your dataset size and characteristics.

- Interpret the Results: Analyze the clusters to understand what they represent. Since clustering is unsupervised, interpretation is essential for assessing the quality of the clusters.
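The first two steps (preparing the data and defining similarity) matter more than they look. A minimal sketch, assuming min-max scaling and Euclidean distance as the similarity measure (both are common choices, not the only ones); the customer records are hypothetical. Without scaling, the spend column, measured in thousands of rupees, swamps the age column.

```python
import math


def min_max_scale(rows):
    """Rescale every feature (column) to the range [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]


def nearest_to(rows, i):
    """Index of the row most similar (smallest Euclidean distance) to rows[i]."""
    others = [j for j in range(len(rows)) if j != i]
    return min(others, key=lambda j: math.dist(rows[i], rows[j]))


# Hypothetical customers: [age in years, monthly spend in Rs.]
customers = [[20, 5000], [22, 6000], [60, 5500], [40, 15000]]

print(nearest_to(customers, 0))                 # 2: the spend column dominates
print(nearest_to(min_max_scale(customers), 0))  # 1: both features now count
```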

Types of Clustering Methods

Some common clustering methods used in machine learning are:

- Partitioning Clustering

- Density-Based Clustering

- Distribution Model-Based Clustering

- Hierarchical Clustering

1. Partitioning Clustering

It is a type of clustering that divides the data into non-hierarchical groups. It is also known as the centroid-based method. The most common example of partitioning clustering is the K-Means Clustering algorithm. In this type, the dataset is divided into a set of k groups, where k is the number of groups, defined in advance.

2. Density-Based Clustering

The density-based clustering method connects highly dense areas into clusters, so clusters of arbitrary shape can be formed as long as the dense regions can be connected. The algorithm identifies the dense regions in the dataset and joins connected areas of high density into clusters.
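The notes do not name a specific density-based algorithm; DBSCAN is a well-known one, and the sketch below assumes it. Its two parameters are `eps`, the neighbourhood radius, and `min_pts`, the number of points (including the point itself) a neighbourhood needs in order to count as dense. Points that cannot be reached from any dense region are labelled -1 (noise). The data is hypothetical.

```python
import math


def dbscan(points, eps, min_pts):
    """Minimal DBSCAN sketch: returns a cluster id per point, or -1 for noise."""
    labels = [None] * len(points)

    def neighbours(i):
        # All points within distance eps of point i (including i itself)
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # not dense enough: noise (may later become a border point)
            continue
        labels[i] = cluster_id  # i is a core point: start a new cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # noise reachable from a core point: border point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_pts:  # j is also a core point: keep expanding
                queue.extend(j_neighbours)
        cluster_id += 1
    return labels


# Hypothetical 2-D data: two dense groups and one isolated point
points = [[1, 1], [1.2, 1], [1, 1.2], [5, 5], [5.2, 5], [5, 5.2], [9, 9]]
print(dbscan(points, eps=0.5, min_pts=3))  # [0, 0, 0, 1, 1, 1, -1]
```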

3. Distribution Model-Based Clustering

In the distribution model-based clustering method, the data is divided based on the probability that each data point belongs to a particular distribution. The grouping is done by assuming the data comes from certain distributions, most commonly the Gaussian (normal) distribution.
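The heart of distribution-based clustering is asking how likely each data point is under each distribution. Here is a minimal sketch of that single step for one-dimensional data, assuming two Gaussian components whose weights, means and standard deviations are hypothetical fixed values. A full Gaussian Mixture Model would also learn these parameters from the data, usually with the Expectation-Maximization (EM) algorithm, which this sketch omits.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of the normal distribution N(mean, std^2) at x."""
    return math.exp(-((x - mean) ** 2) / (2 * std**2)) / (std * math.sqrt(2 * math.pi))


def membership_probabilities(x, components):
    """Probability that x belongs to each component.

    `components` is a list of (weight, mean, std) tuples whose weights sum to 1.
    """
    scores = [w * gaussian_pdf(x, m, s) for w, m, s in components]
    total = sum(scores)
    return [score / total for score in scores]


# Hypothetical components, e.g. heights (cm) of two groups
components = [(0.5, 150.0, 5.0), (0.5, 175.0, 5.0)]

print(membership_probabilities(150.0, components))  # almost all weight on the first
print(membership_probabilities(162.5, components))  # [0.5, 0.5]
```

Assigning each point to its most probable component turns these soft memberships into ordinary clusters.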

4. Hierarchical Clustering

Hierarchical clustering can be used as an alternative to partitioning clustering, as it does not require the number of clusters to be specified in advance. In this technique, the dataset is divided into clusters to create a tree-like structure, which is also called a dendrogram. The most common example of this method is the Agglomerative Hierarchical algorithm.
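The agglomerative idea can be sketched in a few lines: start with every point as its own cluster and keep merging the two closest clusters. The sketch assumes single linkage (the distance between two clusters is the distance between their closest members), which is one of several linkage rules; it is a brute-force illustration rather than an efficient implementation, and the points are hypothetical. The recorded merge history is the information a dendrogram draws.

```python
import math


def agglomerative(points, n_clusters):
    """Single-linkage agglomerative clustering (bottom-up sketch).

    Returns the final clusters (lists of point indices) and the merge history
    as (left cluster, right cluster, merge distance) tuples.
    """
    clusters = [[i] for i in range(len(points))]
    merges = []
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Single linkage: distance between the closest pair of members
                d = min(math.dist(points[i], points[j])
                        for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        clusters[a] = clusters[a] + clusters[b]  # merge b into a (b > a)
        del clusters[b]
    return clusters, merges


# Hypothetical 2-D points
points = [[1, 1], [1.5, 1], [5, 5], [5, 6], [9, 1]]
clusters, merges = agglomerative(points, n_clusters=3)
print(clusters)  # [[0, 1], [2, 3], [4]]
for left, right, d in merges:
    print(left, "+", right, "at distance", d)
```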

K-Means Clustering

The k-means algorithm is one of the most popular clustering algorithms. K-Means Clustering is an unsupervised learning algorithm that is used to solve clustering problems in machine learning and data science. It groups the dataset by dividing the samples into clusters so that the variance (the sum of squared distances to the cluster centre) within each cluster is as small as possible. The number of clusters must be specified in advance.

Steps Involved in K-Means Clustering:

The working of the K-Means algorithm is explained in the below steps:

- Select the number K to decide the number of clusters.

- Select K random points as the initial centroids. (They need not be points from the input dataset.)

- Assign each data point to its closest centroid, which will form the predefined K clusters.

- Calculate the mean of the data points in each cluster and place the new centroid of that cluster there.

- Repeat the third step: reassign each data point to the new closest centroid.

- If any reassignment occurs, go back to the fourth step; otherwise, stop.

- The model is ready.
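The K-Means steps above can be sketched in plain Python. This is a minimal version of the standard iterative procedure (often called Lloyd’s algorithm), assuming Euclidean distance, initial centroids picked at random from the data points, and a fixed `seed` so runs are repeatable; the points are hypothetical.

```python
import math
import random


def k_means(points, k, max_iters=100, seed=0):
    """Plain K-Means: returns (centroids, cluster index for each point)."""
    rng = random.Random(seed)
    # Pick k distinct data points as the initial centroids
    centroids = [list(p) for p in rng.sample(points, k)]
    assignment = None
    for _ in range(max_iters):
        # Assign every point to its nearest centroid
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Stop when no point changes cluster
        if new_assignment == assignment:
            break
        assignment = new_assignment
        # Move each centroid to the mean of the points assigned to it
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignment


# Hypothetical 2-D data with two obvious groups
points = [[1, 1], [1.5, 2], [1, 1.5], [8, 8], [8.5, 9], [9, 8]]
centroids, labels = k_means(points, k=2)
print(labels)  # the first three points share one label, the last three the other
```

Because results depend on the initial centroids, a common practice is to run K-Means several times with different seeds and keep the result with the smallest within-cluster variance.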

Applications of K-Means Clustering:

- Market Segmentation: group customers based on similar purchasing behaviours or demographics for tailored marketing strategies.

- Image Segmentation: partition images into regions of similar colours to aid in tasks like object detection and compression.

- Document Clustering: categorize documents based on content similarity, aiding in organization and information retrieval.

- Anomaly Detection: identify outliers by clustering normal data points and detecting deviations.

- Customer Segmentation: segment customers for targeted marketing and personalized experiences.

Advantages of K-Means Clustering:

- Easy to implement, making it suitable for users of all levels.

- Handles large datasets with low computational resources.

- Works well with numerous features and data points.

- Results are easy to interpret, aiding in decision-making.

- Applicable across various domains and data types.

Limitations of K-Means Clustering:

- Results can vary based on initial centroid placement.

- Assumes clusters are spherical, which is not always true.

- Number of clusters must be known beforehand.

- Outliers can distort clusters due to their influence on centroids.

- May converge to suboptimal solutions instead of the global optimum.

Disclaimer: We have made every effort to provide you with an accurate handout of “Machine Learning Algorithms Class 11 Notes”. If you feel that there is any error or mistake, please contact me at anuraganand2017@gmail.com. The above CBSE study material present on our website is for educational purposes only; we do not claim copyright over it. All the above content and screenshots are taken from the Artificial Intelligence Class 11 CBSE Textbook and Support Material available on the CBSEACADEMIC website. This Textbook and Support Material are copyrighted by the Central Board of Secondary Education. We are only providing a medium to help students improve their performance in the examination.

Images shown above are the property of individual organizations and are used here for reference purposes only.

For more information, refer to the official CBSE textbooks available at cbseacademic.nic.in