AI Ethics and Values Class 11 Notes – The CBSE has updated the syllabus for Class XI (Code 843). These notes are based on the updated syllabus and the updated CBSE textbook. All the important information is taken from the Artificial Intelligence Class XI textbook, which follows the CBSE Board pattern.

AI Ethics and Values Class 11 Notes

Ethics in Artificial Intelligence

AI ethics refers to the ethical principles and guidelines that govern the design, development, and deployment of AI technologies. AI ethics aims to ensure that AI systems are developed and used in ways that are fair, transparent, accountable, and aligned with human values.

- Example 1: Suppose a CCTV camera spots your face in a crowd outside a sports stadium. A passing cloud casts a shadow on your face, and the neural network mistakenly matches your face to that of a wanted criminal.

- Example 2: This happened in the USA in 2018. An AI system was being used to allocate care to nearly 200 million patients in the US. It was later discovered that the AI system was offering a lower standard of care to Black patients than to white patients.

AI ethics plays a crucial role in ensuring that AI technologies are developed and deployed in a manner that upholds ethical principles and respects human rights. Ethical considerations are essential in addressing issues such as bias, transparency, accountability, privacy, and the societal impact of AI.

The five pillars of AI Ethics

- Explainability: Explainability refers to the transparency and interpretability of AI systems, allowing users to understand how algorithms make decisions and predictions.

- Fairness: Fairness in AI seeks to remove bias and discrimination from algorithms and decision-making models. Machine learning fairness addresses and eliminates algorithmic bias from machine learning models based on sensitive attributes like race and ethnicity, gender, sexual orientation, disability, and socioeconomic class.

- Robustness: Robustness in AI systems refers to their ability to consistently provide accurate and reliable results across the conditions they encounter and over extended periods. It is about making sure that AI algorithms and systems operate as expected, without unexpected errors or deviations from their intended behavior.

- Transparency: Transparency involves openness and disclosure about the design, operation, and implications of AI systems. Transparent AI frameworks provide clear documentation, disclosure, and communication about the data, algorithms, and decision-making processes used in AI applications.

- Privacy: Privacy refers to the right of individuals to control their personal information and to be free from unwanted intrusion into their lives. It encompasses the ability to keep certain aspects of one’s life private, such as personal communications, activities, and personal data.

Bias, Bias Awareness, Sources of Bias

Bias, in simple terms, means having a preference or tendency towards something or someone over others, often without considering all the relevant information fairly. It can lead to unfair treatment or decisions based on factors like personal beliefs, past experiences, or stereotypes.

Bias awareness means understanding that AI systems might have unfair preferences because of different things like the information they were taught with, the rules they follow, or the ideas they were built upon. So, being aware of bias in AI is like knowing that sometimes AI might make unfair choices or judgments because of how it was trained or made.

AI bias, also referred to as machine learning bias or algorithm bias, refers to AI systems that produce biased results that reflect and perpetuate human biases within a society, including historical and current social inequality.

Sources of bias in AI

Eliminating AI bias requires drilling down into datasets, machine learning algorithms and other elements of AI systems to identify sources of potential bias.

- Training data bias: AI systems learn to make decisions based on training data, so it is essential to assess datasets for the presence of bias. One method is to review data sampling for over- or underrepresented groups within the training data. For example, training data for a facial recognition algorithm that over-represents white people may create errors when attempting facial recognition for people of color.

- Algorithmic bias: Using flawed training data can result in algorithms that repeatedly produce errors, unfair outcomes, or even amplify the bias inherent in the flawed data. Algorithmic bias can also be caused by programming errors, such as a developer unfairly weighting factors in algorithm decision making based on their own conscious or unconscious biases. For example, indicators like income or vocabulary might be used by the algorithm to unintentionally discriminate against people of a certain race or gender.

- Cognitive bias: When people process information and make judgments, they are inevitably influenced by their experiences and preferences. As a result, people may build these biases into AI systems through the selection of data or how the data is weighted. For example, cognitive bias could lead to favoring datasets gathered from Americans rather than sampling from a range of populations around the globe.
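The sampling review mentioned under training data bias can be sketched in a few lines of Python. This is only an illustration, not a method from the textbook: the group labels, the sample data, and the 20% threshold are all made-up assumptions, and the function names `group_shares` and `underrepresented` are hypothetical.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(labels)
    total = len(labels)
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, threshold=0.2):
    """List groups whose share is below the threshold (0.2 is an assumed cut-off)."""
    shares = group_shares(labels)
    return sorted(group for group, share in shares.items() if share < threshold)


# Made-up group labels for ten training examples (illustrative only).
sample = ["A"] * 8 + ["B"] * 1 + ["C"] * 1

print(group_shares(sample))      # share of each group in the sample
print(underrepresented(sample))  # groups that fall below the assumed threshold
```

A real review would choose the groups and the threshold based on the population the AI system is meant to serve; a low share is a prompt to collect more data, not proof of bias on its own.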

Examples of AI bias in real life

- Healthcare – Underrepresentation of women or minority groups in data can skew predictive AI algorithms. For example, computer-aided diagnosis (CAD) systems have been found to return lower accuracy results for Black patients than for white patients.

- Online advertising – Biases in search engine ad algorithms can reinforce job role gender bias. Independent research at Carnegie Mellon University revealed that Google’s online advertising system displayed high-paying positions to men more often than to women.

- Image generation – Academic research found bias in the generative AI art application Midjourney. When asked to create images of people in specialized professions, it showed both younger and older people, but the older people were always men, reinforcing gender bias about the role of women in the workplace.

Mitigating Bias in AI Systems

Mitigating bias in AI systems is essential for several reasons.

- When AI systems have bias, they can make existing problems like unfairness and discrimination even worse. For example, biased algorithms used in hiring processes may unfairly disadvantage certain groups, leading to systemic discrimination.

- Biased AI makes people trust technology less. If people don’t trust AI to make fair decisions, they might not want to use it, which can cause problems for everyone.

- Addressing bias is essential for upholding ethical principles and ensuring that AI technologies are developed and used responsibly.

Strategies for Mitigating Bias

There are several strategies and techniques for mitigating bias in AI systems:

- Using Diverse Data: To reduce bias, we should use lots of different kinds of information to teach AI. This way, the AI can learn from many different examples and viewpoints, making it less likely to be biased.

- Detecting Bias: We need ways to find and measure bias in AI systems before they are used. This could mean looking at how the AI makes decisions for different groups of people or using special tools to see if the AI is being fair.

- Fair Algorithms: We can make AI systems fairer by using special algorithms that are designed to be fair. These algorithms make sure to consider fairness when making decisions, helping to reduce bias.

- Being Transparent: It is important for AI systems to be clear and explain how they make decisions. When people understand how AI works, they can see if there is any bias and fix it.

- Inclusive Teams: When creating AI, it is helpful to have a team of people from different backgrounds and experiences. This way, they can spot biases that others might miss and make sure the AI is fair for everyone.
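The "Detecting Bias" strategy above, looking at how the AI makes decisions for different groups, can be illustrated with a small Python sketch. It compares how often each group receives a positive decision and reports the gap between the highest and lowest rate. The decisions, the group names "X" and "Y", and the function names are hypothetical and chosen only for illustration; this is one simple check among many, not the textbook's prescribed method.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (1 = selected, 0 = rejected) per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up hiring decisions for two hypothetical groups, X and Y.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["X", "X", "X", "X", "Y", "Y", "Y", "Y"]

print(selection_rates(decisions, groups))  # selection rate for each group
print(parity_gap(decisions, groups))       # larger gap = less equal treatment
```

A gap of zero means every group is selected at the same rate. A large gap does not prove the system is unfair, but it signals that the decisions deserve a closer look.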

Developing AI policies

Developing AI policies is essential for ensuring that AI technologies are used responsibly, safely, and ethically, while also promoting innovation and public trust.

- Rules for AI should start with being good to people and respecting their rights. This means treating everyone fairly, being honest about how AI works, making sure it is safe, and being accountable if something goes wrong.

- We need clear rules and standards for how AI is used. These rules should cover important things like protecting people’s information, making sure AI does not have unfair biases, keeping it safe, and making sure people can ask questions about how AI works.

- When making these rules, it is important to talk to lots of different people. This includes government officials, business leaders, scientists, community groups, and members of the public. Everyone’s opinion matters because AI affects everyone.

- Before using AI, we should check to see if there are any problems or risks. This means thinking about what could go wrong and making plans to fix it.

Moral Machine game

An ethical dilemma is a situation where there is no clear “right” or “wrong” decision, and any action taken may have both positive and negative consequences. An ethical dilemma in the context of artificial intelligence (AI) arises when there is a conflict between moral principles or values in the design, development, deployment, or use of AI technologies.

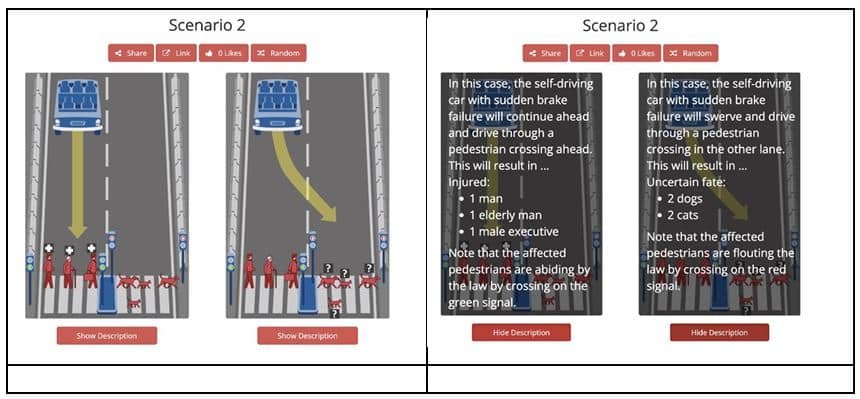

The Moral Machine, developed by researchers at the Massachusetts Institute of Technology (MIT), is an online platform designed to explore ethical dilemmas in AI through interactive decision-making scenarios. For example, imagine you are the operator of a self-driving car that encounters a situation where it must choose between swerving to avoid hitting a group of pedestrians, potentially endangering its passengers, or staying the course and risking harm to those on the road. What decision would you make? And more importantly, why?

Disclaimer: We have made every effort to provide an accurate handout of “AI Ethics and Values Class 11 Notes”. If you find any error or mistake, please contact me at anuraganand2017@gmail.com. The CBSE study material presented on our website is for educational purposes only, and we do not own its copyright. All the above content and screenshots are taken from the Artificial Intelligence Class 11 CBSE Textbook and Support Material available on the CBSEACADEMIC website. This Textbook and Support Material are copyrighted by the Central Board of Secondary Education. We only provide a medium to help students improve their performance in the examination.

Images shown above are the property of individual organizations and are used here for reference purposes only.

For more information, refer to the official CBSE textbooks available at cbseacademic.nic.in